JavaScript for the modern web and good user experience

TL;DR

- JavaScript helps us enhance user experience.

- JavaScript runs on users’ devices, so we shouldn’t abuse their processing power and battery.

- Ship only necessary JavaScript and as little as possible.

- For SEO, JavaScript boils down to prerendering, after which you are left with plain HTML.

- A user should be able to interact with the UI as soon as they see it, so reduce the time between the UI rendering and it becoming interactive.

1. Web & JavaScript

Since Netscape introduced it in 1995, JavaScript has come a long way, becoming an indispensable tool for modern websites.

We use JavaScript to enhance user experience and to provide interfaces far more interactive than was possible before its introduction.

With advances in JavaScript virtual machines and hardware, JavaScript runs seamlessly for the user and lets us, as developers, implement bespoke user interfaces and experiences on a whole new level.

Gone are the days of building a separate desktop app for each and every platform. Browsers provide a uniform platform and great core building blocks for applications, games, and virtual reality experiences, in addition to their original purpose of browsing the internet.

2. Web apps vs. websites

I would like to take a minute and highlight that web apps and websites are two different things at their core.

Websites are content-first: their primary purpose is sharing information, which is mostly static by nature.

Web apps, on the other hand, are functionality-first: they provide interactive tools that let users accomplish their tasks, e.g. CRMs, instant messengers, and webmail.

Another way to tell them apart is to look at how information flows.

On a website, the user requests and consumes content, and they control what they view and at what pace.

In a web app, the system is in control, and it needs to notify the user about new content as soon as possible.

With that in mind, it is in a website’s best interest to optimise content delivery so that users can view content as soon as possible, ideally in less than a second from the moment they request it.

Web apps need to provide a smooth experience throughout their use, and users can usually tolerate slower loading times because, once loaded, most of the functionality is available.

Not everything is black and white, however: there are sites that need to behave like apps (e.g. wikis, forums) and apps that need to look like websites (e.g. online retail).

The key is to strike a balance between being fast and providing core functionality, making some concessions along the way.

3. With great power comes great responsibility

JavaScript runs in the user’s browser, consuming both a device’s resources and power.

As more people access the internet on mobile devices, which have limited resources and battery life, this must be taken into account. As developers and website owners, we are responsible for what we serve users and how we use the resources their devices provide.

It horrifies me to see a website riddled with ads consuming 1GB of memory until the browser tab eventually crashes.

Although there are different contributing factors to resource utilisation, here we are focusing on JavaScript and how it affects resource usage, user experience, and a site’s performance.

We will be looking at JavaScript delivery, loading and different frameworks in the wild, as well as how it affects SEO.

3.1. Startup performance

Even before JavaScript runs and affects user experience, it needs to be parsed and compiled.

We need to keep in mind that the more JavaScript we serve, the more time the browser needs to parse and compile it, and the more memory the compiled code consumes.

Browsers employ a number of techniques to speed this up, but at the end of the day the parse and compile stage remains a significant cost.

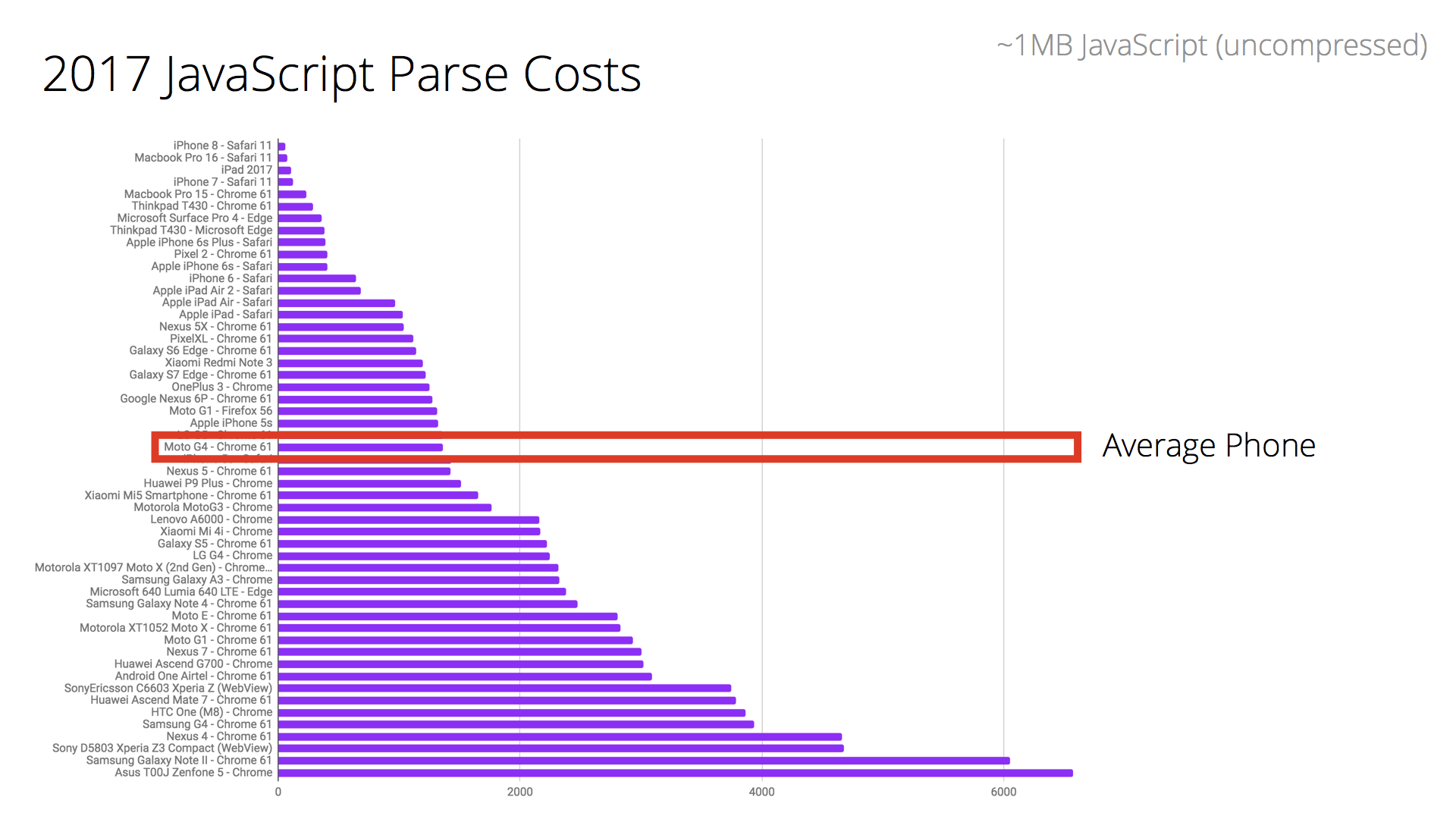

Even among phones, the time spent parsing and compiling JavaScript differs by a factor of two to five between average phones and the latest high-end models.

Read this post by Addy Osmani, which goes into the minute details of JavaScript startup in Chrome.

Back in the HTTP/1.1 days, it was considered good practice to bundle all your JavaScript and (sometimes) serve it from several domains, to work around HTTP/1.1’s limitation of one request at a time per connection.

With the adoption of HTTP/2, we gained the ability to load multiple resources over a single connection, which made module loading with tools like RequireJS, the import statement, or the new dynamic import() more viable.

The catch is that each module is requested, loaded, and executed on demand, and it may in turn require another module, which creates a chain of request → parse/compile → execute iterations.
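To make the cost of that chain concrete, here is a toy model (a simplifying sketch, not how any browser actually schedules requests): a module’s dependencies are only discovered after the module itself has been fetched and executed, so every level of the dependency graph adds at least one sequential round trip. The module names and the 100 ms round-trip time are hypothetical, and parse/compile time and bandwidth are ignored.

```javascript
// Toy model of on-demand module loading: a module's imports are discovered
// only after it has been fetched and executed, so each level of the
// dependency graph costs one more sequential round trip.
// Assumes an acyclic graph; ignores parse/compile time and bandwidth.
function sequentialRoundTrips(graph, entry) {
  const deps = graph[entry] || [];
  if (deps.length === 0) return 1;
  // Siblings at the same level can be requested in parallel,
  // so only the deepest branch determines the total.
  return 1 + Math.max(...deps.map((dep) => sequentialRoundTrips(graph, dep)));
}

// Hypothetical module graph for one page.
const moduleGraph = {
  'app.js': ['router.js', 'analytics.js'],
  'router.js': ['basket.js'],
  'basket.js': ['price-format.js'],
  'analytics.js': [],
  'price-format.js': [],
};

const rttMs = 100; // assumed round-trip time on a mobile connection
const trips = sequentialRoundTrips(moduleGraph, 'app.js');
console.log(`${trips} sequential round trips, at least ${trips * rttMs} ms of latency`);
// If every module were known up front (bundled or preloaded),
// they could all be requested in a single round trip instead.
```

Here the chain app.js → router.js → basket.js → price-format.js costs four round trips, even though analytics.js loads in parallel with router.js.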

To achieve better startup performance, we need to minimise the amount of JavaScript we ship and balance how we deliver it.

Ideally, we would use code splitting and route-based chunking to produce one shared bundle required by all (or most) parts of the website, plus one bundle per route or section that covers only that section’s functionality.

As the user navigates the website, they benefit from the shared bundle already being cached, while the smaller per-route bundles improve startup performance.
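The splitting idea can be illustrated with a small model. This is a sketch with hypothetical route and module names; real bundlers work from the actual import graph with many more heuristics. Modules needed by at least `minRoutes` routes go into the shared bundle, and everything else stays in its route’s own bundle.

```javascript
// Toy model of route-based chunking: modules used by at least `minRoutes`
// routes go into one shared bundle; the rest stay in per-route bundles.
function splitByRoute(routes, minRoutes = Object.keys(routes).length) {
  // Count how many routes use each module.
  const usage = new Map();
  for (const modules of Object.values(routes)) {
    for (const mod of new Set(modules)) {
      usage.set(mod, (usage.get(mod) || 0) + 1);
    }
  }

  const shared = [...usage]
    .filter(([, count]) => count >= minRoutes)
    .map(([mod]) => mod)
    .sort();
  const sharedSet = new Set(shared);

  // Each route bundle only contains what the shared bundle does not.
  const perRoute = {};
  for (const [route, modules] of Object.entries(routes)) {
    perRoute[route] = [...new Set(modules)].filter((mod) => !sharedSet.has(mod)).sort();
  }
  return { shared, perRoute };
}

// Hypothetical retail site.
const routes = {
  home: ['preact', 'header', 'hero-carousel'],
  product: ['preact', 'header', 'gallery', 'reviews'],
  basket: ['preact', 'header', 'basket', 'reviews'],
};

console.log(splitByRoute(routes).shared); // [ 'header', 'preact' ]
console.log(splitByRoute(routes, 2).shared); // [ 'header', 'preact', 'reviews' ]
```

Lowering `minRoutes` grows the cached shared bundle; raising it keeps more code in the per-route bundles, which is the balance described above.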

Bundlers like webpack, Parcel, and FuseBox, used together with ES6 modules, let us reduce the amount of JavaScript by removing a lot of dead code and splitting the rest into chunks based on entry points and shared dependencies.
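For webpack specifically (webpack 4 or later), a configuration along the following lines declares one entry point per route and uses a `splitChunks` cache group to pull modules shared between entry points into one shared bundle. The entry names and file paths are hypothetical. Production mode enables minification and removal of unused ES module exports, which only works if your import/export syntax is not converted to CommonJS (e.g. by Babel) before webpack sees it.

```javascript
// webpack.config.js: a sketch for webpack 4+, with hypothetical route entry files.
module.exports = {
  // Production mode turns on minification and tree shaking of unused ES module exports.
  mode: 'production',
  // One entry point (and therefore one bundle) per route.
  entry: {
    home: './src/routes/home.js',
    product: './src/routes/product.js',
    basket: './src/routes/basket.js',
  },
  output: {
    // Content hashes let browsers keep each bundle cached until its contents change.
    filename: '[name].[contenthash].js',
  },
  optimization: {
    splitChunks: {
      cacheGroups: {
        // Modules used by at least two entry points go into one "shared" bundle
        // (still subject to webpack's default minimum chunk size).
        shared: {
          name: 'shared',
          chunks: 'initial',
          minChunks: 2,
        },
      },
    },
  },
};
```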

The new Cache API lets us store Request/Response pairs, and the cache can be populated eagerly, before any request is made.

Progressive web apps use this API, typically from a service worker, to provide offline experiences and to improve reliability under uncertain network conditions. It can also speed up asset fetching by precaching resources.

In our case, we can use it to precache the JavaScript bundles for other parts of the website that the user is likely to visit.
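A minimal service worker sketch of that idea follows. The cache name and bundle URLs are hypothetical. The two helpers take the CacheStorage object as a parameter (a choice made here so they can be exercised outside a browser); inside a service worker you pass the global `caches`. `caches.open`, `cache.addAll`, `caches.match`, `event.waitUntil`, and `event.respondWith` are standard Service Worker and Cache API methods.

```javascript
// Hypothetical bundles for pages the user is likely to visit next.
const CACHE_NAME = 'route-bundles-v1';
const LIKELY_NEXT_BUNDLES = ['/js/product.js', '/js/basket.js'];

// Eagerly populate a cache: addAll fetches each URL and stores the response.
async function precacheBundles(cacheStorage, cacheName, urls) {
  const cache = await cacheStorage.open(cacheName);
  await cache.addAll(urls);
}

// Serve from the cache when possible, otherwise go to the network.
async function cacheFirst(cacheStorage, request, fetchFn) {
  const cached = await cacheStorage.match(request);
  return cached || fetchFn(request);
}

// Wire the helpers up only when running inside a service worker.
if (typeof self !== 'undefined' && typeof caches !== 'undefined' && 'registration' in self) {
  self.addEventListener('install', (event) => {
    event.waitUntil(precacheBundles(caches, CACHE_NAME, LIKELY_NEXT_BUNDLES));
  });
  self.addEventListener('fetch', (event) => {
    if (new URL(event.request.url).pathname.startsWith('/js/')) {
      event.respondWith(cacheFirst(caches, event.request, fetch));
    }
  });
}
```

Versioning the cache name is one simple way to make sure a new deployment does not keep serving stale bundles.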

3.2. Third-party scripts and iframes

Including third-party scripts can be highly detrimental to overall performance.

Almost all websites have a number of third-party scripts, ranging from analytics and metrics scripts, to advertisement/retargeting scripts and social integrations.

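Keeping them to a minimum helps loading times and reduces event handler lag while the page is starting up. It’s important to remember that JavaScript on a page runs on a single main thread, so all the code, yours and third-party, runs serially: while one script is executing, nothing else, including your event handlers, can run.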
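The effect of a single main thread is easy to demonstrate. In the sketch below (runnable in Node or a browser console), a zero-delay timer callback stands in for an event handler, and a busy loop stands in for a heavy third-party script. The callback is due immediately, but it cannot run until the blocking task has finished. The 200 ms duration is arbitrary.

```javascript
// Simulate a heavy script by busy-waiting on the main thread.
function blockMainThread(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // Nothing else (timers, input handlers) can run while this loop spins.
  }
}

// Schedule a callback that is due immediately, then block the thread,
// and report how late the callback actually ran.
function measureHandlerDelay(blockMs) {
  return new Promise((resolve) => {
    const scheduledAt = Date.now();
    setTimeout(() => resolve(Date.now() - scheduledAt), 0);
    blockMainThread(blockMs);
  });
}

measureHandlerDelay(200).then((delay) => {
  console.log(`handler ran ${delay} ms after it was due`);
});
```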

Furthermore, iframes can run in the same process, and on the same main thread, as the main page, which means they can block your main thread, delaying startup or making event handlers sluggish and degrading the user experience.

Websites with a large number of Facebook Like buttons, embedded YouTube players, and other social widgets visibly suffer from this.

The Guardian loads ~2MB of its own JavaScript, but because it embeds a number of YouTube iframes, it loads an additional 13.73MB of JavaScript.

Bear in mind that our focus is on JavaScript, so browser caching and transfer size are immaterial: what matters is that each YouTube player iframe loads ~1.37MB of script that has to be parsed, compiled, and executed.
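Putting the two Guardian figures together gives a sense of scale (simple arithmetic on the numbers above, rounded):

$$
\frac{13.73\ \text{MB}}{1.37\ \text{MB per player}} \approx 10\ \text{players},
\qquad
\frac{13.73\ \text{MB}}{2\ \text{MB} + 13.73\ \text{MB}} \approx 87\%
$$

In other words, roughly ten embedded players account for almost nine tenths of the JavaScript on the page.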

3.3. Popular frameworks

Almost every website or web app uses one or more frameworks, and these add to the total script size. Here are some popular frameworks and their sizes; some of them have dramatically trimmed their weight over the years:

| Framework | Version | Size (minified) |
|---|---|---|
| Angular 1 | 1.6.5 | 165K |
| Angular 5 | ng cli 1.6.7 | 44K |
| React + ReactDOM | 16.4.0 | 94K + 7.1K |
| Ember | 2.18.2 | 476K |
| Vue (runtime) | 2.5.16 | 60K |
| Preact (+compat) | 8.2.9 (3.18.0) | 8.1K (9.3K) |
| RequireJS | 2.3.5 | 18K |
| jQuery | 3.3.1 | 85K |
| jQuery UI | 1.12.1 | 248K |
| Knockout | 3.4.2 | 59K |

These are the minified sizes of the core framework/library runtimes only. Any additional libraries you bring in will increase the total JavaScript weight further.

Now you might ask yourself: do I need to bring in Ember for a static website? What about React? Why can’t my simple website be built with Preact? I have experience with jQuery; could it be sufficient?

Some of these frameworks are geared towards Single Page Applications and some are more general utility libraries.

When picking a framework, you need to keep in mind which problems it is better at solving, and how to utilise it to bring out its best qualities.

For SPAs, frameworks that manage the whole DOM are key. Using such a framework in the same manner to build a regular website misuses its power.

Don’t use React/Angular/Vue to build websites, even if you can. That’s not what they are good at.

That is not to say they are never a good fit: as component-centric frameworks, they are very well suited to building interactive components that are added separately to a page to enhance the experience, for example when:

- You have an online retail website and need a mini-basket on every page (make a static fallback that is replaced and enhanced by a component once it is available).

- You need a search box with suggestions (make a simple one, and enhance it with a component).

- You need a main hamburger menu on mobile (make a CSS/HTML-only fallback first, then enhance it with a component; you will see why later).
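The enhancement pattern behind these examples can be sketched as a small helper. It assumes the server has already rendered a working HTML fallback and that the component’s code exposes some mount function; `enhance`, `loadComponent`, and `mount` are hypothetical names, not part of React, Preact, or Vue. If the component fails to load, the fallback simply stays in place.

```javascript
// Progressive enhancement: the server-rendered fallback works on its own;
// a component takes over only once its code has loaded successfully.
async function enhance(fallbackEl, loadComponent) {
  if (!fallbackEl) return false; // this page has no such widget
  try {
    const mount = await loadComponent(); // e.g. fetch the component bundle on demand
    mount(fallbackEl); // replace or augment the static markup
    fallbackEl.dataset.enhanced = 'true';
    return true;
  } catch (err) {
    // Network or script error: keep the working HTML fallback.
    console.warn('Enhancement skipped:', err);
    return false;
  }
}
```

In a browser this might be called as `enhance(document.querySelector('#mini-basket'), () => import('./mini-basket.js').then((m) => m.mount))`, where the selector and module path are again hypothetical.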

Remember KISS (Keep It Simple, Stupid)? I suppose it has gone out of fashion recently. When you choose tools for a job, ask yourself: can this be simplified and still let us be productive? Does it deliver quality?

There are concessions to be made, but you must not assume that everyone has access to top-end phones or the best mobile networks.

4. SEO

How does JavaScript affect SEO? It doesn’t; or rather, it wouldn’t affect it at all if it weren’t for the proliferation of client-side HTML rendering.

Search engines crawl the web by parsing and analysing textual content and making it searchable. Their primary interest is content. User experience, as far as JavaScript is concerned, is not part of the search query or the search result, and is of little interest to them, if any at all.

The problem with client-side HTML rendering is that the page is de facto empty until its JavaScript has been loaded and run, so search engines that do not execute JavaScript cannot extract any content. Check out this React SEO article by Reza Moaiandin, where he explains why not all search engines render JavaScript, and what you must do when you use React and care about search engines.
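To illustrate, compare what a crawler that does not execute JavaScript would extract from a client-side-rendered shell and from a prerendered version of the same hypothetical product page. The `extractText` helper is a deliberately naive stand-in for a crawler’s text extraction, not how any real search engine works.

```javascript
// A client-side-rendered page: the content only appears after app.js runs.
const clientShell = `
<html><body>
  <div id="root"></div>
  <script src="/app.js"></script>
</body></html>`;

// The same page prerendered on the server: the content is already in the HTML.
// (Real code must HTML-escape these values.)
function prerenderProduct(product) {
  return `
<html><body>
  <div id="root">
    <h1>${product.name}</h1>
    <p>${product.description}</p>
  </div>
  <script src="/app.js"></script>
</body></html>`;
}

// Naive text extraction: drop scripts and tags, keep the visible text.
function extractText(html) {
  return html
    .replace(/<script[\s\S]*?<\/script>/gi, '')
    .replace(/<[^>]+>/g, ' ')
    .replace(/\s+/g, ' ')
    .trim();
}

const product = { name: 'Walnut desk', description: 'Solid walnut, 140 cm wide.' };
console.log(JSON.stringify(extractText(clientShell))); // ""
console.log(extractText(prerenderProduct(product))); // Walnut desk Solid walnut, 140 cm wide.
```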

Running prerendering at scale is hard and costly. Check out this article, where Browserless discusses its experience of running 2 million headless sessions; and here, Ahrefs explains that it believes its customers are not ready to pay for prerendering all websites.

At the time of writing, only Google officially renders JavaScript, and doing so takes time, making the whole crawling and indexing process longer. Assuming you are only interested in appearing in Google, should you rely on client-side rendering for SEO?

- Depending on network connectivity, users might have to wait beyond the initial page load to see any content, and search engines take this into account.

- Facebook and Twitter don’t render JavaScript, so you still need to prerender Open Graph (OGP) tags and Twitter Card meta tags.

- Canonical links and other meta tags still apply, so if you are not prerendering them, you must not forget to update them with JavaScript.

- Google renders web pages in a variation of Chrome 41 (as of 07/06/2018). Chrome 41 was released in March 2015, which makes it three years old, so it does not support all the latest shiny JavaScript features you might be using. Furthermore, certain features and behaviours differ from a real browser; for example, timeouts fire immediately.

- Making sure your site works in Chrome 41 requires polyfilling any features it does not support. Doesn’t this remind you of supporting Internet Explorer? Now imagine supporting Bing’s version if and when they build one. What about Yandex? Baidu? NameTheEngine?

- JavaScript has to be parsed and compiled before it can run, and any syntax that is not valid for a specific JavaScript version makes the whole script fail to parse. Any use of runtime features that do not exist prevents the operation from completing at runtime. All of this means that unless your code compiles and runs on that specific version (Chrome 41 for now), your HTML will not be rendered and the indexer will not pick it up. If you must, you can always feed your JavaScript through a transpiler to output an ES5 version and include polyfills, but then you are shipping more JavaScript. Consider prerendering web pages for search engines using something like Rendertron, which works like a reverse proxy.
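For the social and canonical meta tags mentioned above, prerendering can be as simple as emitting them in the server’s HTML response. The sketch below assumes a hypothetical `page` object; the tag conventions themselves (`og:*` with the `property` attribute, `twitter:*` with the `name` attribute, and `rel="canonical"`) are the standard Open Graph, Twitter Card, and canonical link conventions. Values are escaped because they usually come from user or CMS data.

```javascript
// Escape characters that are special in HTML text and attribute values.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

// Render head tags that crawlers and social scrapers can read without running JavaScript.
function renderHeadMeta(page) {
  const tags = [
    `<title>${escapeHtml(page.title)}</title>`,
    `<link rel="canonical" href="${escapeHtml(page.canonicalUrl)}">`,
    `<meta name="description" content="${escapeHtml(page.description)}">`,
    // Open Graph tags (read by Facebook and others) use the "property" attribute.
    `<meta property="og:title" content="${escapeHtml(page.title)}">`,
    `<meta property="og:description" content="${escapeHtml(page.description)}">`,
    `<meta property="og:image" content="${escapeHtml(page.imageUrl)}">`,
    `<meta property="og:url" content="${escapeHtml(page.canonicalUrl)}">`,
    // Twitter Card tags use the "name" attribute.
    `<meta name="twitter:card" content="summary_large_image">`,
    `<meta name="twitter:title" content="${escapeHtml(page.title)}">`,
  ];
  return tags.join('\n');
}

console.log(renderHeadMeta({
  title: 'Walnut desk & chair',
  description: 'Solid walnut, 140 cm wide.',
  canonicalUrl: 'https://shop.example/desks/walnut',
  imageUrl: 'https://shop.example/img/walnut.jpg',
}));
```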

To summarise from an SEO perspective: if you come across a fully or partially client-side-rendered website where certain content is not crawled or indexed, and you want to improve it:

- Is the client interested in search engines other than Google? If the answer is yes, there is only one solution: the website MUST prerender.

- Ask whether they can prerender HTML for everyone. If the answer is no, tell them that they MUST prerender at least for search engines.

- If the answer is still no, compromise on partial prerendering, but highlight the risk that content that is not prerendered might not be indexed.
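When prerendering only for search engines and social scrapers, a common approach is to detect them by user agent and route their requests through a prerendering service such as Rendertron, while everyone else gets the normal client-side app. Below is a minimal sketch of that routing decision; the bot pattern is illustrative rather than exhaustive, the Rendertron host is hypothetical, and the URL format assumes Rendertron’s `/render/<url>` endpoint.

```javascript
// Illustrative, not exhaustive: user-agent tokens of common crawlers and scrapers.
const BOT_PATTERN = /googlebot|bingbot|yandex|baiduspider|duckduckbot|facebookexternalhit|twitterbot|linkedinbot/i;

function isBot(userAgent) {
  return BOT_PATTERN.test(userAgent || '');
}

// Hypothetical Rendertron deployment; assumes its /render/<url> endpoint.
const RENDERTRON_BASE = 'https://rendertron.example.com/render/';

// Decide where a request should be served from.
function resolveUpstream(userAgent, pageUrl) {
  if (isBot(userAgent)) {
    return { prerender: true, url: RENDERTRON_BASE + encodeURIComponent(pageUrl) };
  }
  return { prerender: false, url: pageUrl }; // regular users get the client-side app
}
```

A reverse proxy or server middleware would call `resolveUpstream` for each request and fetch the page from the returned URL.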

Fun fact: although Google has deprecated its AJAX crawling scheme, other search engines, e.g. Yandex, still use it.

5. Usability

Ultimately, what matters is that a user can interact with the page. There is a metric known as Time to Interactive (TTI), which Google defines as:

Time to Interactive is defined as the point at which layout has stabilized, key webfonts are visible, and the main thread is available enough to handle user input.

I will go one step further and say that certain key UI elements that are powered by JavaScript must be initialised and ready to handle user input.

Here is a quick TTI primer.

For the first page load, without a primed browser cache, aim to be interactive in five seconds or less; for repeat loads, two seconds or less. JavaScript-powered UI on the page should be rendered and ready for interaction. Strive to reduce the time between the UI being rendered and it becoming interactive.

On mobile devices, with unpredictable network connections and highly variable performance, providing fast above-the-fold interactivity is crucial.

That means serving fewer scripts overall so the main thread can handle input sooner, serving critical JavaScript first, and possibly lazy-loading other scripts depending on the UX.
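Here is a minimal sketch of that ordering: critical initialisers (such as wiring up the hamburger menu) run straight away, while non-critical ones are deferred until the browser is idle. `requestIdleCallback` and its `timeout` option are a real browser API, but not every browser supports it, hence the `setTimeout` fallback. The `whenIdle` and `bootstrap` helpers and the initialisers are hypothetical.

```javascript
// Defer work until the main thread is idle, falling back to a short timeout
// where requestIdleCallback is unavailable (some browsers, Node).
function whenIdle(callback, timeout = 2000) {
  if (typeof requestIdleCallback === 'function') {
    return requestIdleCallback(callback, { timeout });
  }
  return setTimeout(callback, 1);
}

// Run critical initialisers immediately and push the rest off the critical path.
function bootstrap({ critical = [], deferred = [] }) {
  critical.forEach((init) => init());
  deferred.forEach((init) => whenIdle(() => init()));
}

// Hypothetical initialisers.
const log = [];
bootstrap({
  critical: [() => log.push('hamburger menu ready')],
  deferred: [() => log.push('analytics loaded'), () => log.push('social widgets loaded')],
});
console.log(log); // [ 'hamburger menu ready' ] (deferred work has not run yet)
```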

Hamburger menus are among the first UX elements susceptible to the “dead UI” effect, which occurs when the page and its UI appear loaded to the user but are actually still loading and initialising.

Any attempt to interact with the UI element fails, leading the user to believe it is broken; the whole site then seems broken, which can result in the user abandoning their task.

The following table shows the time to first paint, the time to first meaningful paint, and the time when the hamburger navigation becomes interactive (i.e. when its event listener is attached). The measurements were collected in desktop Chrome with mobile device emulation enabled, without network or CPU throttling.

| Website | Total JS size (approx.) | Bundled | First paint / first meaningful paint | Hamburger TTI (cached) |
|---|---|---|---|---|
| Jaguar (Shop) | 5.72MB | Yes | ~500ms / ~830ms | ~2953ms (~2577ms) |
| Richer Sounds | 4.77MB | No | ~982ms / ~1574ms | ~8237ms (~5239ms) |

These two websites were chosen because they use the same platform but serve JavaScript differently, and both exhibit a noticeable dead UI effect.

As we can see, mobile navigation only becomes usable after a substantial delay.
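One way to quantify the delay (simple arithmetic on the uncached figures in the table) is the window between first meaningful paint and the hamburger becoming interactive, during which the menu looks ready but does nothing:

$$
\begin{aligned}
\text{Jaguar:}\quad & 2953\ \text{ms} - 830\ \text{ms} \approx 2.1\ \text{s} \\
\text{Richer Sounds:}\quad & 8237\ \text{ms} - 1574\ \text{ms} \approx 6.7\ \text{s}
\end{aligned}
$$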

With non-bundled delivery, the user gets no feedback or indication that the page is still loading, as the subsequent JavaScript is loaded asynchronously after the main page load.

Serving critical UI JavaScript as a separate bundle, early in the resource load order, can alleviate this issue. See Resource Prioritization for more information on how Chrome prioritises requests and how you can influence the priority order.
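In HTML terms, one way to do this is to preload the small critical UI bundle so the browser starts fetching it early at high priority, and to mark all scripts `defer` so they do not block parsing while still executing in document order. The sketch below generates those tags on the server; `rel="preload"` with `as="script"` and the `defer` attribute are standard HTML, while `renderScriptTags` and the bundle paths are hypothetical, and the critical bundle is assumed to be self-contained.

```javascript
// Emit script tags so the critical UI bundle is fetched first and at high priority,
// while the rest of the JavaScript downloads without blocking HTML parsing.
function renderScriptTags({ critical, deferred = [] }) {
  return [
    // Preload starts the download as soon as the <head> is parsed.
    `<link rel="preload" href="${critical}" as="script">`,
    // Deferred scripts execute in document order after parsing finishes,
    // so listing the critical bundle first also makes it run first.
    `<script src="${critical}" defer></script>`,
    ...deferred.map((src) => `<script src="${src}" defer></script>`),
  ].join('\n');
}

console.log(renderScriptTags({
  critical: '/js/critical-ui.js', // e.g. hamburger menu and search box
  deferred: ['/js/shared.js', '/js/product.js', '/js/analytics.js'],
}));
```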

Summary

JavaScript is an amazing tool for enhancing user experience, but as we move towards a mobile-first (mobile-most) future, it is paramount that we provide fast experiences that work on the majority of devices, without relegating low- and mid-end devices to second-class status.

It is necessary to ship a minimal amount of JavaScript in the right order to achieve quick load times and TTI.

With such a large variety of JavaScript frameworks, choosing the right one for the job has never been more challenging.

Balancing pros & cons, productivity, and experience is a good way to start when picking a framework.

But picking a framework for something it is not geared towards is a good way to shoot yourself in the foot later.

SEO and JavaScript come down to one thing only: prerendering. It solves the whole issue, and we are back to good old HTML.

The priority should be enhancing the user experience rather than denying it to people on obsolete browsers or devices. Serving as much as possible as HTML helps us achieve that.