React SEO, Universal JavaScript – Part 1

TL;DR

- Websites that are built using React.JS are perfectly SEO-friendly when built correctly.

- If you are going to build a website that renders the front-end using React.JS, then make sure you render the first version before serving it to the search engines.

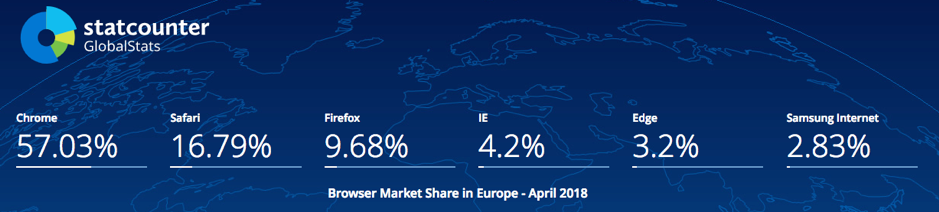

It is no news to anyone that old browsers which can’t render the latest standards of CSS and JS are almost dead now, having been replaced with auto-updating browsers that can render almost any type of application. With this change, companies such as Facebook and Google introduced frameworks such as React and Angular to advance and speed up web-based applications.

Although these frameworks are now over five years old (eight in the case of Angular), their adoption rate has been very slow, due to both browser compatibility and an initial steep learning curve for some. This meant that only the most advanced developers could pick up the frameworks and quickly produce a usable product.

Now that some time has passed, more and more resources are available for juniors to learn about and use these frameworks. This in turn means we will start to see a rapid increase in the number of websites using them as their core frontend frameworks over the next few years.

In this article I am going to explain the good, the bad and the ugly of React.JS for Search Engine Optimisers and developers. I will start off with the basic questions and then go into further detail. Please don’t hesitate to get in touch with me if you have Angular JS related SEO questions.

Basic questions answered

Is there such a thing as an SEO-friendly React.js webpage or web application?

Simply put, yes — but given their resource-heavy integrated structure it does mean that search engines which support React natively will need to use more resources to render such applications or websites… hence the answer won’t be as simple as just a straightforward yes.

Which search engines can support React.JS webpages natively?

As a rule of thumb, I would suggest that you pre-render or use isomorphic JavaScript techniques for the moment for all search engines. I have, however, broken down each search engine and their abilities in the table below:

| Search Engine | Core Target Language | Basic JS Support | Full JavaScript Support (e.g., React) |
| --- | --- | --- | --- |
| Google | International | Yes | Partial |
| Bing | International | Yes | No |
| Yandex | Russian | Basic | No |
| Baidu | Chinese | Basic | No |
| Naver | Korean/Japanese | Basic | No |
| Daum | Korean | Very Basic | No |
| Seznam | Czech | Very Basic | No |

I have not listed meta search engines such as DuckDuckGo and Yahoo as they are powered by bigger search engines such as Google and Bing.

Based on the table, you should not rely on native support just yet or, in fact, for many more years to come.

With all this advancement, why don’t search engines always want to fully support JavaScript?

Cost. If we go back to basics, search engines use servers to crawl websites. Back in the day, search engines used to just read the code (response from the server) and analyse the data and links within it. As time passed by, search engines also started to run the JavaScript code as the page content loaded without rendering it visually so they could discover more actions and links. This may take up to three real time seconds and less than one second of CPU time.

Now, if they are to render each page fully this means that they are most likely to spend two to three times that amount of time to analyse each page and will be required to use a much larger amount of memory.

Currently it is estimated that Google has just over 3 million servers running at any one time. If Google were to move onto full rendering, the number of those servers would have to at least double. Doing that is neither cheap nor environmentally friendly until we have faster hardware and software rendering engines.

Pre-rendering and Isomorphic JavaScript

Now that we know why search engines are not very happy about fully rendering JavaScript, what is the solution?

We need to make it easy for search engines to crawl our websites. In order to achieve that we can take multiple routes; one is to pre-render our webpages and serve the static content, and another is to go for a middle-way approach of Isomorphic JavaScript, which is also known as universal JavaScript. I prefer the latter as it significantly improves the user experience.

What is the difference between pre-rendering and Isomorphic (Universal) JavaScript?

To offer up a fair comparison, it would be best that I explain the similarities between pre-rendering and Isomorphic JavaScript first. The aim of both is to serve digestible HTML content to crawlers and browsers.

By default, React.JS pages can be just a single line of HTML in the body which acts as template code that is then filled with nested content as the content is pulled from the server and rendered on the client side (user’s browser or crawler).

In order to send digestible content, both methods use an intermediate server resource to pull the requested content and modify the HTML so that it is sent to the user as final-state content rather than template code. This means that the content served will be fully expanded HTML which will contain content and links, both of which crawlers love and understand. They will then run any further simple JavaScript and CSS to understand page structure changes.
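To make the contrast concrete, the sketch below compares the kind of empty shell a client-only page might send with a fully expanded response. It is a minimal illustration in plain JavaScript, not React's actual renderer (in a real React application, `renderToString` from `react-dom/server` plays this role). The `renderPage` function, the shape of the `article` object, the `root` element id and the `/bundle.js` path are all assumptions made for the example.

```javascript
// What a client-only page might send: a template with no content or links.
const emptyShell =
  '<body><div id="root"></div><script src="/bundle.js"></script></body>';

// Escape text so that data pulled from the server cannot break the markup.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Hypothetical server-side step: take the requested content and expand the
// template into final-state HTML before it is sent to the browser or crawler.
function renderPage(article) {
  const links = article.related
    .map(
      (item) =>
        `<li><a href="${escapeHtml(item.url)}">${escapeHtml(item.title)}</a></li>`
    )
    .join("");
  return (
    "<body>" +
    '<div id="root">' +
    `<h1>${escapeHtml(article.title)}</h1>` +
    `<p>${escapeHtml(article.body)}</p>` +
    `<ul>${links}</ul>` +
    "</div>" +
    '<script src="/bundle.js"></script>' +
    "</body>"
  );
}

const html = renderPage({
  title: "React & SEO",
  body: "Content a crawler can read without running JavaScript.",
  related: [{ url: "/part-2", title: "Part 2" }],
});
console.log(html);
```

The second response already contains the heading, the paragraph and the link, so a crawler that never runs the bundle still has something to index.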

The difference between pre-rendered serving and Isomorphic comes at the final stage. When a page is pre-rendered and served, any further interaction with links or the page is not necessarily a known state to the JavaScript that is served with the page. For example, if a link on the page is clicked, we are most likely to be sending a full-page request to the servers and back to the user.

When we serve an Isomorphic JavaScript webpage, we also attach listeners to the HTML DOM content. This simply means that the page is interactive straight away, and only the content that needs changing will be updated. For example, if a link on the menu is clicked, the application would only change the main body content and not the header and menu, and hence fewer resources are required from the server. The user will also have a much better interaction with the page.
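The partial-update behaviour can be sketched without a browser. The example below models the page as a plain object and swaps only the main content when a link is clicked. The `page` object, the `routes` table and the `handleLinkClick` function are hypothetical stand-ins for the DOM, the content held on the server and the listeners an isomorphic application would attach; a real React application would do this through its router and component tree.

```javascript
// Stand-in for the rendered page: header and menu arrive with the first
// server-rendered response and should not be rebuilt on navigation.
const page = {
  header: "<header>My site</header>",
  menu: '<nav><a href="/">Home</a><a href="/about">About</a></nav>',
  main: "<main>Welcome</main>",
};

// Stand-in for content the client would request from the server.
const routes = {
  "/": "<main>Welcome</main>",
  "/about": "<main>About us</main>",
};

// Hypothetical click listener: replace only the region that changes and
// report which regions were touched.
function handleLinkClick(currentPage, path) {
  const nextMain = routes[path];
  if (nextMain === undefined) {
    // Unknown route: signal that a normal full-page request is needed.
    return { page: currentPage, updated: [], fullReload: true };
  }
  const updated = nextMain === currentPage.main ? [] : ["main"];
  return {
    page: { ...currentPage, main: nextMain },
    updated,
    fullReload: false,
  };
}

const result = handleLinkClick(page, "/about");
console.log(result.updated, result.page.main);
```

Only the `main` region is reported as updated; the header and menu strings are carried over untouched, which is the saving described above.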

Below is a screen capture of an Isomorphic webpage before JavaScript is disabled, and then a screen capture with JavaScript disabled (similar to a web crawler).

In the video above, as you can see in the network connections on the left, once the page has loaded, most of the content for related pages loads gradually in the background, allowing users to see instant changes.

Once the JavaScript is disabled in the video above, as you can see, all the content on the page still loads and on every click a new page is loaded up with the content within it. This means that the crawler is capable of seeing all the HTML content without any JavaScript enabled and will consume the content.

Why would I want to render React.JS components on the server before they are sent to the user?

We can break this down into two aspects: SEO and User Experience.

Benefits of pre-rendering React.JS components for SEO

As explained earlier in this article, we simply cannot rely on search engines to crawl non-pre-rendered React.JS webpages correctly. The native response code at this moment is not very digestible and too heavy to process for search engines. I believe it will be a fair few years before they will have full JavaScript support.

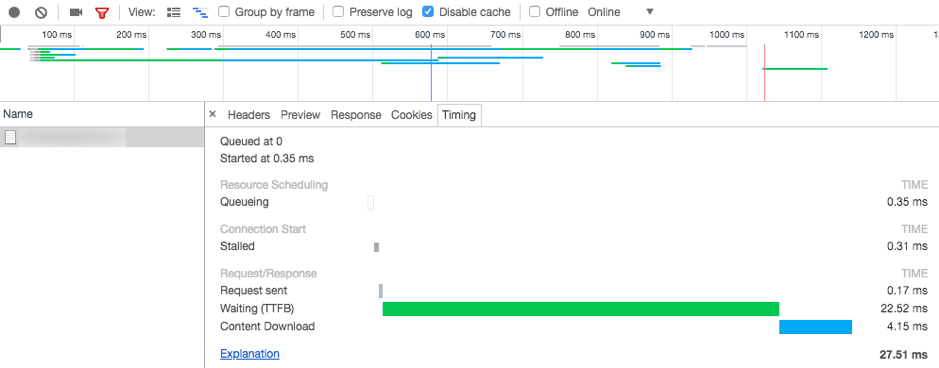

Another reason for pre-rendering the content is speed. If the architecture of a website is done correctly, the pre-rendered content can be easily cached and served to the crawlers extremely quickly. If that is further cached behind CDNs with good network routing, we are likely to serve content to the search engines in under 50 ms, something that search engines favour.
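As a rough illustration of the caching idea, the sketch below wraps a render function in a small in-memory cache with a time-to-live. The names `createRenderCache`, `slowRender` and `ttlMs` are assumptions made for this example, and a production setup would more likely rely on a CDN or a shared cache than on process memory.

```javascript
// Wrap a (possibly slow) render function with an in-memory cache.
// `ttlMs` is how long a rendered page stays fresh; `now` is injectable so the
// behaviour can be checked without waiting.
function createRenderCache(render, ttlMs, now = () => Date.now()) {
  const entries = new Map();
  return function getPage(url) {
    const cached = entries.get(url);
    if (cached && now() - cached.storedAt < ttlMs) {
      return cached.html; // served from cache, no rendering work
    }
    const html = render(url);
    entries.set(url, { html, storedAt: now() });
    return html;
  };
}

// Hypothetical renderer that counts how often it is actually invoked.
let renderCount = 0;
const slowRender = (url) => {
  renderCount += 1;
  return `<html><body><h1>Page for ${url}</h1></body></html>`;
};

let clock = 0; // fake clock in milliseconds
const getPage = createRenderCache(slowRender, 60000, () => clock);
getPage("/about"); // rendered
getPage("/about"); // served from cache
clock = 61000;
getPage("/about"); // entry expired, rendered again
console.log(renderCount); // 2
```

Three requests result in only two renders here; with a longer time-to-live or a CDN in front, most crawler requests would never reach the renderer at all.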

Benefits of having pre-rendered components for users

The first and most obvious reason behind this is to improve compatibility with older browsers, if that matters.

The second reason is speed. In research done by Google previously, it was discovered that mobile users are most likely to leave a website if it takes more than three seconds to load.

Pre-rendering components will reduce complete load time significantly as they are generated on the server side on more powerful hardware. Latency is also reduced as the server is most likely to be closer to the database servers, etc. This also means that those on less powerful devices do not have to render all their pages client-side.

Are there any good examples of Isomorphic React.JS webpages?

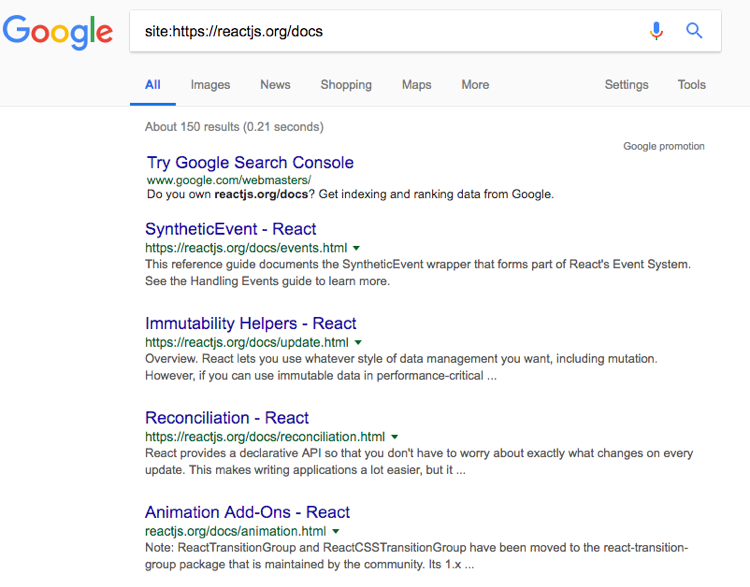

There are plenty of good examples out there. React’s own website is a great example of an Isomorphic webpage — all the document pages are isomorphic and indexed very well, with all content visible to Google.

React has been very good and released their documentation’s source code as well, which can be explored or used as a template to begin with.

Currently the React.JS community is working hard to build and release more open source examples. Meanwhile, I would recommend looking at this WordPress React Template. I will update this post once better open source templates are available to work from.

Common React.JS SEO issues

Below are some of the common issues SEOs face when working with React over time. For the moment I have included one of them, and I will add to this over time.

Please get in touch with me if you have further questions and problems that you commonly face with regards to React and SEO.

React application seen as a blank page via “Fetch as Google”

If the Screenshot on “Fetch as Google” is empty and you have taken all the correct steps of implementing a good pre-rendered webpage, it is most likely that Google’s screen capture system hasn’t been updated yet. To check if that is the case, click on the URL that you have fetched and check the “Fetching” section of the loaded page. In there you can view the response code of the page. If the response code in the HTML is as expected you do not have to worry — in that case, perhaps attempt to fetch as Google at another time.

If the HTML code, on the other hand, is just React template code or unexpected, then the problem is most likely to be from your side.
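One way to tell the two cases apart is to inspect the raw HTML response for readable text and links. The function below is a rough heuristic written for this article, not a Google tool or a full HTML parser; `inspectHtml` and the two sample responses are assumptions made for illustration.

```javascript
// Rough heuristic: does the <body> contain readable text and links once
// scripts, styles and tags are ignored?
function inspectHtml(html) {
  const bodyMatch = html.match(/<body[^>]*>([\s\S]*?)<\/body>/i);
  const body = bodyMatch ? bodyMatch[1] : html;
  const withoutCode = body
    .replace(/<script[\s\S]*?<\/script>/gi, "")
    .replace(/<style[\s\S]*?<\/style>/gi, "");
  const linkCount = (withoutCode.match(/<a\s[^>]*href=/gi) || []).length;
  const visibleText = withoutCode
    .replace(/<[^>]*>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
  return {
    linkCount,
    textLength: visibleText.length,
    looksLikeEmptyTemplate: visibleText.length === 0 && linkCount === 0,
  };
}

// Hypothetical responses: a bare React template and a pre-rendered page.
const templateOnly =
  '<html><head><title>App</title></head><body><div id="root"></div>' +
  '<script src="/bundle.js"></script></body></html>';
const preRendered =
  '<html><head><title>App</title></head><body><div id="root">' +
  '<h1>Hello</h1><a href="/about">About</a></div></body></html>';

console.log(inspectHtml(templateOnly).looksLikeEmptyTemplate); // true
console.log(inspectHtml(preRendered).looksLikeEmptyTemplate); // false
```

If the fetched HTML looks like the first sample, the pre-rendering step is not running for that request and the fix is needed on the server side.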