A guide to planning downtime for your website

This guide is for anyone who wants to make sure that website downtime does not harm their organic search performance.

What is website downtime?

The term downtime refers to a period of time when a website is unavailable or offline.

Although downtime is often planned for routine maintenance, it can also result from an unplanned event, such as a server outage or a malicious cyber-attack.

Does downtime affect organic search performance?

As with most SEO advice, the answer is that it depends, in this case on how long the website is offline.

According to Matt Cutts, as long as the downtime does not happen over a long period of time, it shouldn’t affect your web rankings in Google.

Several Googlers have also highlighted that downtime does not automatically mean that Google will drop pages from its search results.

However, the best way to make sure that downtime does not affect rankings is to configure it properly, so that search engines are told the website is only temporarily unavailable.

How to configure SEO friendly site downtime

To make sure your website’s downtime does not impact on your organic search performance, please follow these steps.

1. Tell search engines your site is temporarily unavailable

To inform search engines that the server they are accessing is temporarily unavailable, you need to return a 503 HTTP status code. The official definition of the 503 HTTP status code according to the RFC is:

The server is currently unable to handle the request due to a temporary overloading or maintenance of the server. The implication is that this is a temporary condition which will be alleviated after some delay. If known, the length of the delay MAY be indicated in a Retry-After header. If no Retry-After is given, the client should handle the response as it would for a 500 response.

When a search engine requests any URL on the website, the server should return a 503 HTTP status code for the duration of the downtime.
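As a rough illustration of returning a 503 for every URL, here is a minimal sketch of a WSGI wrapper in Python. It assumes the site runs as a WSGI application; the names `maintenance_middleware`, `is_down` and `site` are hypothetical and not part of any framework.

```python
def maintenance_middleware(app, is_down):
    """Wrap a WSGI app so every request gets a 503 while is_down() is true.

    `app` is the real site and `is_down` is any zero-argument callable,
    for example one that checks whether a flag file exists (assumption).
    """

    def wrapped(environ, start_response):
        if is_down():
            body = b"Service temporarily unavailable for maintenance.\n"
            start_response(
                "503 Service Unavailable",
                [
                    ("Content-Type", "text/plain; charset=utf-8"),
                    ("Content-Length", str(len(body))),
                ],
            )
            return [body]
        # Outside the maintenance window, hand the request to the real site.
        return app(environ, start_response)

    return wrapped


def site(environ, start_response):
    """Hypothetical stand-in for the real website."""
    body = b"Hello, world.\n"
    start_response(
        "200 OK",
        [
            ("Content-Type", "text/plain; charset=utf-8"),
            ("Content-Length", str(len(body))),
        ],
    )
    return [body]
```

To try it locally, the wrapped app can be passed to `wsgiref.simple_server.make_server("127.0.0.1", 8000, maintenance_middleware(site, lambda: True))` from the standard library. In production the same effect is usually achieved in the web server or load balancer configuration instead.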

Alongside the 503 HTTP status code, you should also send the Retry-After response header. The RFC defines the Retry-After response-header field as:

The Retry-After response-header field can be used with a 503 (Service Unavailable) response to indicate how long the service is expected to be unavailable to the requesting client. This field MAY also be used with any 3xx (Redirection) response to indicate the minimum time the user-agent is asked wait before issuing the redirected request. The value of this field can be either an HTTP-date or an integer number of seconds (in decimal) after the time of the response.

In an HTTP response, the header takes one of two forms, either an HTTP-date or a number of seconds:

Retry-After: Fri, 31 Dec 1999 23:59:59 GMT

Retry-After: 120

If you know how long your site will be down, you can set the Retry-After header value to the time you expect the server to be back online.

If the downtime runs longer than expected, remember to update the Retry-After value. Otherwise Google may waste its resources by trying to crawl your website at the wrong time.
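Both forms of the Retry-After value can be generated from the expected end of the maintenance window. The sketch below uses only the Python standard library; the function names are hypothetical, and it assumes you pass timezone-aware datetimes.

```python
from datetime import datetime, timezone
from email.utils import format_datetime


def retry_after_http_date(back_online: datetime) -> str:
    """Format the expected end of the downtime as an HTTP-date."""
    # format_datetime(..., usegmt=True) only accepts an aware datetime in UTC,
    # so convert first. `back_online` must carry timezone information.
    return format_datetime(back_online.astimezone(timezone.utc), usegmt=True)


def retry_after_seconds(back_online: datetime, now: datetime) -> str:
    """Return the whole seconds left until the expected end, never negative."""
    remaining = int((back_online - now).total_seconds())
    return str(max(remaining, 0))


end = datetime(1999, 12, 31, 23, 59, 59, tzinfo=timezone.utc)
print(retry_after_http_date(end))  # Fri, 31 Dec 1999 23:59:59 GMT
print(retry_after_seconds(end, datetime(1999, 12, 31, 23, 57, 59, tzinfo=timezone.utc)))  # 120
```

If the maintenance window is extended, recomputing the value from the new end time keeps the header accurate.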

2. Custom error message

Just as you would for a custom 404 page, it is important to provide a custom page for users when serving a 503 status code.

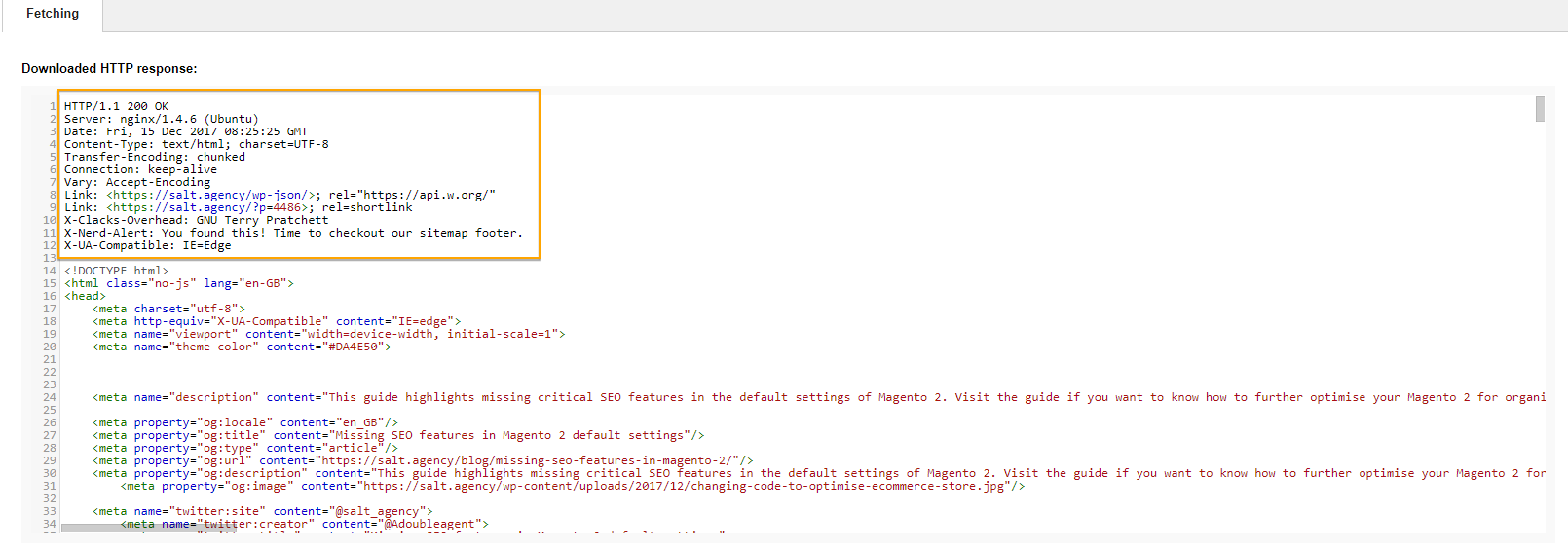
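A custom page is only useful if it is still served with the 503 status code rather than a 200. The following is a minimal sketch of a WSGI app that does both; the site name, the expected return time and the one-hour Retry-After value are placeholder assumptions.

```python
from html import escape


def render_maintenance_page(site_name: str, expected_back: str) -> bytes:
    """Build a small HTML page for human visitors (content is illustrative)."""
    page = f"""<!doctype html>
<html lang="en">
<head><meta charset="utf-8"><title>{escape(site_name)} is down for maintenance</title></head>
<body>
<h1>We'll be back soon</h1>
<p>{escape(site_name)} is undergoing planned maintenance.
We expect to be back by {escape(expected_back)}.</p>
</body>
</html>
"""
    return page.encode("utf-8")


def maintenance_app(environ, start_response):
    """Serve the custom page for every URL, always with a 503 status."""
    body = render_maintenance_page("Example Site", "14:00 UTC")  # placeholders
    start_response(
        "503 Service Unavailable",
        [
            ("Content-Type", "text/html; charset=utf-8"),
            ("Content-Length", str(len(body))),
            ("Retry-After", "3600"),  # assumed one-hour window
        ],
    )
    return [body]
```

Visitors see a friendly message while crawlers still receive the 503 status and the Retry-After hint.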

3. Use Fetch as Google to test the HTTP headers

Use the Fetch and Render tool as both Googlebot and Googlebot Smartphone to make sure that the 503 status code is being served to Google’s crawler user-agents.

If you have also set the Retry-After header, check the fetching tab in Fetch and Render and review the response headers from your pages. The Retry-After header should appear there.
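As a quick complement to the tool, the status code and Retry-After header can also be checked from a script. This sketch sends a request with a Googlebot user-agent string using Python's standard library. It only imitates the user-agent and does not come from Google's IP addresses, so it does not replace the check in Fetch and Render. The function name is hypothetical.

```python
import urllib.error
import urllib.request

# Desktop Googlebot user-agent string; used here only to mimic the header.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def check_downtime_response(url, user_agent=GOOGLEBOT_UA, timeout=10):
    """Return (status_code, retry_after_value_or_None) for a URL."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status, response.headers.get("Retry-After")
    except urllib.error.HTTPError as error:
        # urllib raises for 4xx and 5xx responses, but the error object
        # still carries the status code and the response headers.
        return error.code, error.headers.get("Retry-After")
```

During a correctly configured maintenance window, calling `check_downtime_response` on any page of the site should return a status of 503, together with the Retry-After value if one was set.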

4. Stop Google from crawling your domain using the /robots.txt file

It is not widely known, but if the /robots.txt file does not return an acceptable status code (200 or 404), Google will stop crawling the website altogether, as Pierre Far explains in this Google+ post.

If you change the robots.txt file status code to 503, then it will stop Google from crawling your website until the planned maintenance is finished.

Pierre also highlighted that webmasters shouldn’t block the site using a Disallow rule, as this could cause crawling issues and slow the recovery of the crawl rate.
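The approach described above can be sketched as a small WSGI app intended to be mounted at /robots.txt only. During maintenance it answers with a 503, and the normal rules are left untouched rather than replaced with a blanket Disallow. The rules shown, the factory name and the Retry-After value are illustrative assumptions.

```python
# The site's usual rules (placeholder content). They are NOT swapped for
# "Disallow: /" during maintenance; only the status code changes.
ROBOTS_TXT = b"User-agent: *\nDisallow:\n"


def make_robots_app(in_maintenance):
    """Build a WSGI app for /robots.txt.

    `in_maintenance` is a zero-argument callable returning True while the
    planned maintenance is running (hypothetical hook).
    """

    def app(environ, start_response):
        if in_maintenance():
            start_response(
                "503 Service Unavailable",
                [("Retry-After", "3600"), ("Content-Length", "0")],
            )
            return [b""]
        start_response(
            "200 OK",
            [
                ("Content-Type", "text/plain; charset=utf-8"),
                ("Content-Length", str(len(ROBOTS_TXT))),
            ],
        )
        return [ROBOTS_TXT]

    return app
```

Once maintenance ends, the same URL goes back to returning a 200 with the unchanged rules.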

5. Telling search engines your site is back in business

Once the downtime is over and the site has been pushed live, run a crawl of the website to make sure that there are no issues or errors.

It’s always a good idea to run a health check with a third-party crawler after planned maintenance, to make sure that old code has not been pushed live and that pages are still indexable.
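For a handful of key pages, a basic version of this health check can be scripted. The sketch below takes a page's status code, response headers and HTML, and lists anything that would stop the page from being indexed. It is a simplified illustration, not a substitute for a full crawler: the function name is hypothetical, the header lookup is case-sensitive, and the regular expression only catches a robots meta tag whose name attribute comes before content.

```python
import re

# Matches e.g. <meta name="robots" content="noindex, nofollow">
META_NOINDEX = re.compile(
    r"""<meta[^>]*name=["']robots["'][^>]*content=["'][^"']*noindex""",
    re.IGNORECASE,
)


def indexability_problems(status, headers, html):
    """Return a list of reasons why a page might not be indexable."""
    problems = []
    if status != 200:
        problems.append(f"unexpected status {status}")
    if "noindex" in headers.get("X-Robots-Tag", "").lower():
        problems.append("X-Robots-Tag contains noindex")
    if META_NOINDEX.search(html):
        problems.append("meta robots contains noindex")
    return problems
```

An empty list means none of these three checks failed. A leftover 503 or a stray noindex from a staging build would show up here straight away.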

6. Monitor crawl errors in Google Search Console

After a website has had some downtime, it’s always best to pay extra attention to the crawl error report in Google Search Console.

If you see an increase in internal server errors or a spike in 404 errors, this could be an indication that you need to review your website.