Top tips for improving index rate and URL crawling

There are 130 trillion pages held within Google’s index, which means that when someone performs a search, an enormous bank of information must be analysed in order to deliver the most relevant results to the user.

When new content is created, however, it won’t appear in the search results straight away: Google first needs to crawl your site and then decide whether the content is worth indexing.

How often a search engine crawls your website depends on a range of factors, but there are things you can do to get crawled more often so that you can improve indexing.

Ensure crawlers can access your site

For pages to be indexed at all, search engines must first be able to crawl your website.

Each site has a limited amount of crawl budget, so it is important that Google and other search engines can crawl your site effectively.

If your pages load too slowly, it could cause crawlers to spend too much time on elements such as large images and files, which might hinder a bot’s ability to find other important links to newly created pages.

Furthermore, if there is duplicated content on your site, it could confuse bots and slow the rate at which they crawl it. This can be a symptom of a much bigger issue.

If you have duplicated content on different URLs, you should consider removing the duplicates, because they waste valuable crawl budget.
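As a rough illustration of how duplicated content on different URLs might be spotted, the sketch below groups pages whose text is identical once case and whitespace are ignored. The function names and sample URLs are hypothetical, and it assumes you have already extracted each page's text; it only catches exact duplicates, not near-duplicates.

```python
import hashlib
from collections import defaultdict


def content_fingerprint(text):
    """Hash the page text after lowercasing and collapsing whitespace."""
    normalised = " ".join(text.lower().split())
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()


def find_duplicate_content(pages):
    """Return groups of URLs that share the same fingerprint.

    `pages` maps each URL to the text extracted from that page.
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical sample data: a parameter URL repeating a product page.
sample_pages = {
    "/shoes": "Red running shoes",
    "/shoes?sort=price": "red  running   shoes",
    "/hats": "Blue hats",
}
duplicates = find_duplicate_content(sample_pages)
```

Each group returned is a set of URLs that are candidates for removal.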

It’s worth regularly checking Google Search Console for crawl errors on your site. Look for new (and old) page errors and fix anything that is flagged to keep crawling efficient.

Add your sitemap to Google Search Console

Keeping your HTML sitemap automatically updated and making sure your XML sitemap is submitted to Google Search Console will help your site get crawled.

What’s more, Search Console will then show additional information about how Google crawls your website.

For example, if you have a properly categorised sitemap (separate sitemaps for products, categories, videos, pages, blog, etc.) and you notice that one area is not being indexed as much as the others, you have something to investigate.

Find out more about building a good sitemap in this Google guide.
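To make the idea of a categorised sitemap more concrete, here is a minimal sketch that builds one XML sitemap per section plus a sitemap index that points to them, following the sitemaps.org format. The function names, the example.com URLs and the file names are assumptions for illustration only.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_urlset(urls):
    """Return a <urlset> sitemap (as a string) listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


def build_sitemap_index(sitemap_urls):
    """Return a <sitemapindex> (as a string) pointing at each section sitemap."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(index, encoding="unicode")


# Hypothetical sections of a site, each with its own sitemap.
products_xml = build_urlset(["https://example.com/products/widget"])
blog_xml = build_urlset(["https://example.com/blog/new-post"])
index_xml = build_sitemap_index([
    "https://example.com/sitemap-products.xml",
    "https://example.com/sitemap-blog.xml",
])
```

Splitting sitemaps by section in this way is what lets you compare how well each area of the site is being indexed.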

Use the URL Inspection tool

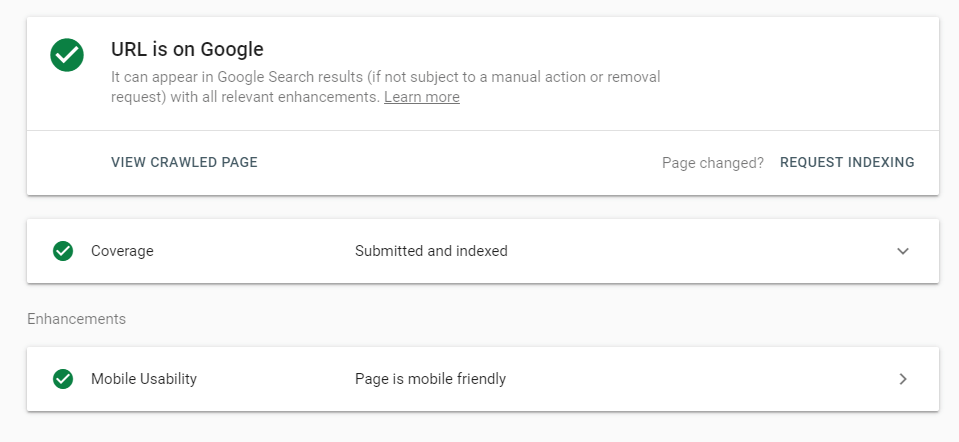

Using the URL Inspection tool in Search Console (which replaced Fetch as Google), you can submit individual URLs to the Google index. This is best used when you only need to submit a small number of URLs.

To do so, simply inspect the URL using the URL Inspection tool and select “Request indexing”, which runs a live test on the URL.

You will also be notified of any obvious indexing issues. If there are none, the page will be queued for indexing.

You can also submit up to 10,000 URLs a day to Bing via its Submit URLs tool on Bing Webmaster Tools if your site has been verified for longer than 180 days.

Block access to unwanted pages

As already mentioned, each site has only a limited crawl budget, which means that it’s important to stop crawlers working through pages that you don’t want to be indexed.

For example, it might be necessary to block access via robots.txt to printable pages, thank you pages, or parameter pages.
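The sketch below shows what such rules might look like and how you could check them before deploying, using Python's standard-library robots.txt parser. The paths (/print/, /thank-you) and the example.com URLs are hypothetical. Plain path prefixes are used because this parser does simple prefix matching.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules blocking printable and thank-you pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /print/
Disallow: /thank-you
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url, user_agent="*"):
    """Return True if the rules above allow `user_agent` to fetch `url`."""
    return parser.can_fetch(user_agent, url)


blocked = is_crawlable("https://example.com/print/page-1")
allowed = is_crawlable("https://example.com/blog/new-post")
```

Here `blocked` is False and `allowed` is True, so the printable page is kept away from crawlers while the blog post remains reachable.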

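It’s worth noting, however, that these pages might still appear in search results if they are linked to from elsewhere.

Therefore, if you want these pages permanently deindexed, you should add a noindex robots meta tag to the page (<meta name="robots" content="noindex">), or use a canonical link tag (<link rel="canonical">) to point to the version you do want indexed. Bear in mind that crawlers can only see these tags if the page is not blocked in robots.txt.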
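As an illustration of what these tags look like in a page's HTML, the following sketch reads a page and reports whether it carries a noindex directive and which canonical URL it declares. The class name, function name and sample pages are hypothetical; it uses only Python's built-in HTML parser.

```python
from html.parser import HTMLParser


class IndexingHintParser(HTMLParser):
    """Collect the robots noindex directive and canonical URL from a page."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = (attrs.get("content") or "").lower()
            directives = [d.strip() for d in content.split(",")]
            if "noindex" in directives:
                self.noindex = True
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")


def indexing_hints(html):
    """Return (noindex, canonical_url) for the given HTML source."""
    parser = IndexingHintParser()
    parser.feed(html)
    return parser.noindex, parser.canonical


# Hypothetical thank-you page that should stay out of the index.
THANK_YOU_PAGE = '<head><meta name="robots" content="noindex, follow"></head>'
# Hypothetical parameter page pointing at its preferred version.
PARAMETER_PAGE = '<head><link rel="canonical" href="https://example.com/shoes"></head>'

thank_you_hints = indexing_hints(THANK_YOU_PAGE)
parameter_hints = indexing_hints(PARAMETER_PAGE)
```

The two sample pages are kept separate on purpose: noindex suits pages that should never appear in results, while a canonical tag suits duplicates of a page that should.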

SALT.agency has written about why and how to stop search engines from accessing certain pages, here.

Link from key indexed pages

Linking to new URLs from existing pages that are already indexed will help Google discover them automatically.

How efficiently it does this, however, depends on the quality of your site’s architecture.

It’s also worth noting that if Google happens to find a new link within an old page, it will probably crawl that page more often.

With this in mind, you could add new links to older pages to help new content get discovered sooner.

Similarly, if an existing URL is recrawled, and it redirects to a new URL, the new one will be crawled.
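One way to reason about how site architecture affects discovery is to measure how many clicks each page sits from a page that is already indexed. The sketch below does this with a breadth-first search over a small, made-up internal link graph; the function name and the URLs are assumptions, and a real audit would build the graph from a crawl of your own site.

```python
from collections import deque


def click_depths(links, start):
    """Return the number of clicks needed to reach each page from `start`.

    `links` maps each URL to the list of URLs it links to. Pages that cannot
    be reached from `start` are left out of the result.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical link graph: "/orphan" has no inbound links.
site_links = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/new-post"],
    "/orphan": [],
}
depths = click_depths(site_links, "/")
unreachable = sorted(set(site_links) - set(depths))
```

Pages that end up in `unreachable` have no path from the starting page, so adding a link to them from an older, indexed page is the obvious next step.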

Ensure that your meta descriptions and titles are descriptive

As well as content, it is important that other aspects of your site, such as meta descriptions and titles, are unique.

If a Google crawler were to see numerous duplicated meta titles and descriptions, it could assume that the pages themselves are duplicated.

Search Console will also highlight any pages that have duplicated meta tags, and you can also use tools such as Screaming Frog.

Deal with them as soon as possible so that you can improve the overall crawling of your site.
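If you export a list of URLs and titles from a crawling tool, a short script can flag the duplicates for you. This is a minimal sketch with a hypothetical function name and sample data; it treats titles as duplicates when they match after ignoring case and extra whitespace.

```python
from collections import defaultdict


def find_duplicate_titles(pages):
    """Return {normalised title: [urls]} for titles used on more than one URL.

    `pages` maps each URL to its meta title.
    """
    by_title = defaultdict(list)
    for url, title in pages.items():
        normalised = " ".join(title.lower().split())
        by_title[normalised].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}


# Hypothetical export of URLs and their titles.
sample_titles = {
    "/a": "Buy Shoes Online",
    "/b": "buy shoes  online",
    "/c": "Contact us",
}
duplicate_titles = find_duplicate_titles(sample_titles)
```

The same approach works for meta descriptions: pass in a mapping of URL to description instead.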

If you want to know more about how indexing can help your site, or anything else mentioned in this post, check out our contact page and get in touch.