Does Google’s cache date matter for SEO?

Around the time of the deindexing bug earlier this month, a large number of websites saw their homepages (among other prominent traffic-driving pages) disappear from Google’s index without warning or explanation.

Before long, webmasters noticed another trend: the cache dates of websites within Google’s public cache had seemingly not updated since 2 April, with a large number held around 31 March.

At the time of publishing this post, that is a gap of 9-11 days since the last cache update.

Although this is an anomaly, it’s worth noting that the public cache (the one we can see) isn’t updated on a daily basis, and gaps between cache refresh dates are normal. What isn’t normal is for all sites to be held around the same dates.

We can speculate as to why Google may not have updated the cache, given its timing with the deindexing bug, and consider whether or not Google has performed a rollback to a previous stable version.

We can also address whether or not Google’s public cache is useful to SEOs — and if we should care that there is an apparent cache bug, or rollback.

Common issues found in the cache

If this is a bug with Google’s cache, then it isn’t the first.

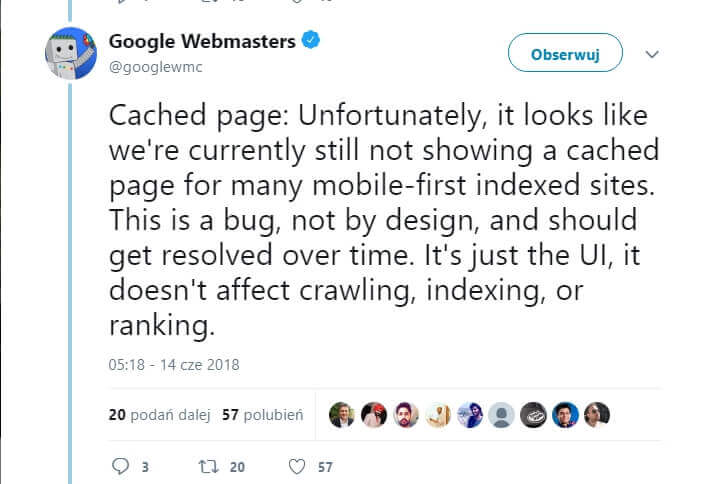

Back in 2018, when Google was moving websites over to the Mobile First Index, the cache encountered issues, and publicly disclosed them as a bug:

Aside from this, there are often a number of issues that webmasters identify within Google’s cache, although they’re frequently not issues with the cache itself.

Some of these are down to technical issues with the website itself, and others are down to Google deciding not to cache a page.

Some of the more common cache questions raised on both forums and Twitter include:

Cache date doesn’t equal crawl date

Using a high-authority website with seven-figure monthly organic traffic as an example, the last cache date displaying is 31 March (as it is for what appears to be a very large number of websites):

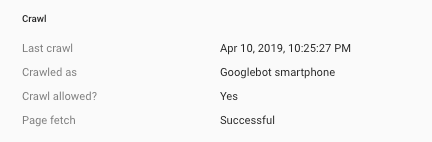

For the same URL in Google Search Console, however, the last crawl date was ~24 hours ago.

So why are these dates different? As explained by John Mueller on Twitter in August 2017:

The cache is kinda separate, so it’s not indicative of what we index & rank.

Similarly, for another lower-authority website, the last crawl date for the homepage is 10 April, and the last homepage cache date is 1 April.

However, major content changes were published on 10 April (prior to the last crawl time), and we have seen some ranking fluctuations off the back of them, so Google is clearly recognising changes.

Google doesn’t store a cache for all the pages it visits

It’s also common to see a cache error when trying to view the public cache, especially for new content or very low authority/traffic websites.

The reason behind this again comes from John Mueller on Twitter:

We don’t cache all pages that we index, so that can happen. Sometimes it takes a while, sometimes we just don’t cache it at all.

It’s also possible to prevent Google from caching your page by using a page-level robots meta tag declaring noarchive.
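If a page is missing from the cache, it can be worth ruling out a noarchive directive first. The following is a minimal Python sketch of that check, assuming you already have the page's HTML as a string. The class and function names are illustrative, and it only inspects meta tags, not an `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() not in ("robots", "googlebot"):
            return
        # The content attribute is a comma-separated list, e.g. "index, noarchive".
        for directive in attr_map.get("content", "").split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def blocks_cache(html: str) -> bool:
    """Return True if the HTML declares noarchive in a robots meta tag."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noarchive" in parser.directives
```

For example, `blocks_cache('<meta name="robots" content="noarchive">')` returns `True`, which would explain a missing cache view without pointing to a deeper problem.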

Because there is a separation between Google’s Cache and how the search engine chooses to index and display search results, it makes sense that it won’t store and cache every webpage in existence — that costs money, and Google isn’t a charity.

The cache being empty

This is a common issue with JavaScript-powered websites, as they sometimes fail to serve content to Googlebot and other crawlers.

This problem hasn’t gone unnoticed, and Martin Splitt has been tasked with helping increase the amount of information available to webmasters on how Google handles and processes JavaScript.

When is it worth checking Google’s cache?

Google’s Cache does give us a lot of information, but it’s not always actionable and can often lead analysts astray.

That being said, I do check the cache when reviewing websites, especially if they use a lot of JavaScript technologies, but rather than see the anomalies within the cache as issues, I see them as potential symptoms of other underlying technical problems.

JavaScript problems

In the case of a number of JavaScript powered websites, the cache appears as empty.

This indicates that the website may not be following best practice guidelines and serving content to search engine user-agents, using technologies like pre-rendering or server-side rendering, or even serving cached rendered versions of the website through a CDN like Cloudflare and Cloudflare Workers.

However, if the JS website has a cache view, I know that they are serving content for Google’s user-agents.
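A rough way to reproduce this check outside the cache is to look at how much visible text the raw HTML response contains before any JavaScript runs. The sketch below is a Python illustration under stated assumptions: the HTML is passed in as a string, the names are hypothetical, and the 20-word threshold is an arbitrary choice rather than anything Google publishes.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects words that sit outside script, style and similar non-content tags."""

    SKIPPED = {"script", "style", "noscript", "template", "title"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.words = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Only count text when we are not inside a skipped tag.
        if self._skip_depth == 0:
            self.words.extend(data.split())


def visible_word_count(html: str) -> int:
    parser = VisibleTextParser()
    parser.feed(html)
    return len(parser.words)


def looks_like_empty_shell(html: str, min_words: int = 20) -> bool:
    """Return True if the unrendered HTML carries almost no visible text.

    min_words is an arbitrary threshold chosen for illustration.
    """
    return visible_word_count(html) < min_words
```

A response consisting of little more than `<div id="root"></div>` and script tags would be flagged, which matches the empty cache view described above. A page that passes this check is at least sending some content in its initial HTML.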

Content duplication issues

This has the potential to happen a lot with international websites (with multiple versions of the same language targeting different countries), and large e-commerce websites with a large number of SKUs.

The symptom of this is opening the cache to see a different URL to the one that you expected to see.

You can also view this issue through the new Google Search Console and the Excluded URLs report. The page is likely to have been flagged as a duplicate, with Google selecting a different canonical from the one declared by the user, which means you need to investigate why.

Ultimately, you have to put in place recommendations to improve the uniqueness of the content and give Google a reason to perceive user value and index it.
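When investigating a mismatch like this, a useful first step is to confirm which canonical the page itself declares, so it can be compared with the URL shown in the cache. The following is a minimal Python sketch, assuming the HTML is already available as a string. The function names and the example.com URLs are placeholders, and the URL comparison is deliberately loose (it ignores case in the host and a trailing slash).

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin, urlsplit


class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr_map = {name: (value or "") for name, value in attrs}
        rels = attr_map.get("rel", "").lower().split()
        if "canonical" in rels and attr_map.get("href"):
            self.canonical = attr_map["href"].strip()


def declared_canonical(html: str, page_url: str) -> Optional[str]:
    """Return the declared canonical as an absolute URL, or None if absent."""
    parser = CanonicalParser()
    parser.feed(html)
    if parser.canonical is None:
        return None
    # Resolve relative hrefs against the URL the page was fetched from.
    return urljoin(page_url, parser.canonical)


def same_page(url_a: str, url_b: str) -> bool:
    """Loose comparison: ignores scheme/host case and a trailing slash."""
    def normalise(url):
        parts = urlsplit(url)
        path = parts.path.rstrip("/") or "/"
        return (parts.scheme.lower(), parts.netloc.lower(), path, parts.query)
    return normalise(url_a) == normalise(url_b)
```

If `same_page(declared_canonical(html, page_url), cached_url)` is `False`, where `cached_url` is the URL you saw in the cache view, then Google has likely chosen a different canonical from the one the page declares.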