No Penguin recovery? Maybe links weren’t the issue…

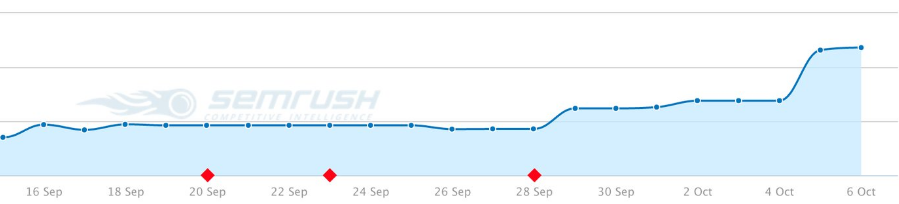

In recent weeks, the much-anticipated Penguin 4.0 update has stolen the industry spotlight, and perhaps rightly so, given the impact we’ve seen from the new real-time algorithm. But what if your site hasn’t recovered in this new post-Penguin world?

Links are only one piece of the SEO jigsaw, and while the importance of that piece is contested in forums across the internet, the fact that other pieces play a part is undeniable. September saw not only the Penguin update, but also the Possum update and a quality update (another Phantom).

It is not the job of search engine optimization to make a pig fly. It is the job of the SEO to genetically re-engineer the Website so that it becomes an eagle. – Bruce Clay

When I used to work client-side, I’d sit in pitches where SEOs would lead with links and link outreach strategies early in the meeting, explaining how acquiring more links would help improve rankings. This was without having had access to Google Analytics or Search Console, or even having taken a thorough look at the website’s technical structure.

After reading a number of Penguin recovery stories in the past week, I’ve also seen the other side of the coin: websites that either (a) haven’t made a recovery, or (b) have rested on their laurels while the sites around them have recovered, changing the SERP landscape.

One of the hardest conversations to have in SEO is telling a client (or potential client) that their website isn’t good enough, and that it requires extensive technical work and web development to bring it up to SEO best practice guidelines. Unfortunately, no matter how many links you throw at a site, if it’s not technically sound it won’t perform.

Quality updates

In January this year, Google incorporated Panda into its core algorithm. For some, making a site ‘Panda-proof’ is just about ensuring that there is enough content on a given page to prevent it from appearing thin in the eyes of the algorithm. While this is true, it’s not just about the length of the content, it’s also about its quality.

Google determines quality using a mixture of its algorithms, RankBrain and human reviewers who manually assess SERPs against the Search Quality Evaluator Guidelines. Google spokesperson Gary Illyes has also confirmed via Twitter that Panda adjusts the ranking of individual pages based on the quality of the site as a whole:

@rustybrick anyway, panda adjusts ranking of pages based on quality of the site.

— Gary Illyes (@methode) October 7, 2016

It’s important that the content on your site is of a high enough quality for your sector and your audience, and contains all the relevant information that Google would expect to see. If your page is about car repair and replacement parts, Google may expect to see part numbers and part names being used, perhaps even a table of other compatible parts for the car.

Crawlability and indexing issues

When Google crawls your domain, it doesn’t (always) crawl every page. It’s important that you understand your domain’s crawl budget and how efficiently the budget is being spent.
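One rough way to see where crawl budget is going is to count Googlebot requests per site section in your server logs. The sketch below assumes combined-format access logs and uses made-up sample lines; the function name `googlebot_hits_by_section` is my own. It also trusts the user-agent string, which can be spoofed, so treat the numbers as indicative only.

```python
import re
from collections import Counter

# Matches the request, status, size, referrer and user-agent fields of a
# combined-format access log line (an assumed format; adjust for your server).
LOG_PATTERN = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits_by_section(log_lines):
    """Count requests whose user agent mentions Googlebot, grouped by top-level path."""
    counts = Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue
        # Drop the query string so faceted URLs collapse onto their base path.
        path = match.group("path").split("?", 1)[0]
        first_segment = path.strip("/").split("/", 1)[0]
        counts["/" + first_segment] += 1
    return counts


# Hypothetical log lines for illustration only.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
sample_log = [
    f'66.249.66.1 - - [10/Oct/2016:13:55:36 +0000] "GET /parts/brake-pads?colour=red HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2016:13:55:40 +0000] "GET /parts/brake-pads?colour=blue HTTP/1.1" 200 5090 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2016:13:56:02 +0000] "GET / HTTP/1.1" 200 8200 "-" "{GOOGLEBOT_UA}"',
    f'203.0.113.9 - - [10/Oct/2016:13:57:11 +0000] "GET /parts/ HTTP/1.1" 200 4300 "-" "{BROWSER_UA}"',
]

hits = googlebot_hits_by_section(sample_log)
for section, count in hits.most_common():
    print(section, count)
```

If one section, or a handful of parameterised URLs within it, accounts for most of the crawl activity while key pages are rarely requested, that is a hint the budget is being spent inefficiently.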

Previously, SEOs could use PageRank as an indicator of ‘crawl budget’, as the higher the PageRank, the more likely the page was to be crawled by Google. This changed with the Caffeine update, when Google’s engineers also introduced Percolator so they could index larger data sets at a time.

It’s not uncommon to come across ‘bot traps’: pages or PDFs with no link back to the main site for Googlebot to follow. You could also be wasting your crawl budget through unnecessary and excessive internal and external links, such as faceted navigation on ecommerce sites or a high volume of external links on a single page.
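As a minimal sketch of how you might spot these dead ends, assume you already have a crawl export mapping each URL to the links found on it (the `link_graph` data and the `find_dead_ends` helper below are hypothetical). Any page with no link to another page on the same host is flagged:

```python
from urllib.parse import urlparse


def find_dead_ends(link_graph, site_host):
    """Return pages that link to no other page on site_host."""
    dead_ends = []
    for page, targets in link_graph.items():
        # A self-link does not give the crawler anywhere new to go.
        has_internal_link = any(
            urlparse(target).netloc == site_host and target != page
            for target in targets
        )
        if not has_internal_link:
            dead_ends.append(page)
    return sorted(dead_ends)


# Hypothetical crawl output: URL -> links found on that URL.
link_graph = {
    "https://example.com/": [
        "https://example.com/parts",
        "https://example.com/brochure.pdf",
    ],
    "https://example.com/parts": ["https://example.com/"],
    "https://example.com/brochure.pdf": [],
    "https://example.com/old-landing": ["https://other.example.org/"],
}

dead_ends = find_dead_ends(link_graph, "example.com")
print(dead_ends)
```

Here the PDF and the old landing page are both reported, since neither gives a crawler a route back into the site.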

It’s important to understand your crawl budget and how site-wide changes, such as moving from HTTP to HTTPS, can affect your site and how it is indexed.

You’ve not replaced your links

When you’re hit with a Penguin penalty, you feel it. Even if it’s just a partial penalty for overcooking the anchor text for specific phrases, you feel it. So what do you do? You bring in an agency to remove the penalty; they put the disavow file together and submit it, and Penguin 4.0 rolls out, but you haven’t seen any recovery.

When a domain is hit by Penguin, all the effort and focus is put on removing the negative links, and it’s assumed that once they have been removed the domain (and its rankings) will spring back. This isn’t always the case, especially if the site’s backlink profile wasn’t extensive and strong to begin with.

It’s important that, as you remove spam links from your backlink profile, you replace them with genuine backlinks gained through PR.