Grounding queries vs fan-out queries: The two hidden layers of AI visibility

AI search is not simply a new interface layered on top of traditional ranking systems. It represents a structural shift in how answers are constructed, how brands are surfaced, and how visibility is measured.

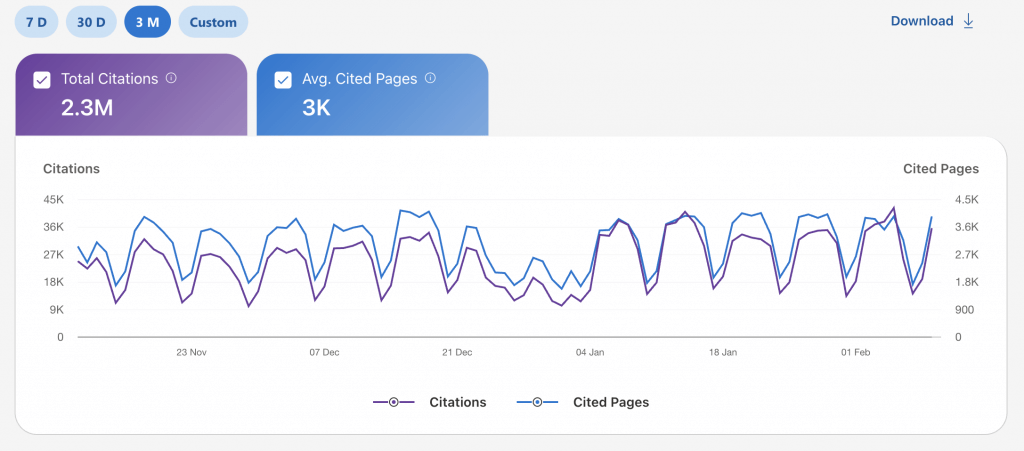

With Bing now exposing AI performance data inside Bing Webmaster Tools, the industry has to confront a more complex reality. Visibility in AI environments is no longer synonymous with ranking position. It is shaped by two distinct yet interconnected mechanisms: grounding queries and fan-out queries.

Understanding the difference between these two mechanisms is not academic, because they directly affect how travel brands build destination authority, how SaaS companies construct documentation ecosystems, and how ecommerce businesses structure product information. If teams fail to distinguish between grounding and fan-out, they risk optimising for the wrong layer of AI discovery.

| Mechanism | Explanation |
| --- | --- |
| Grounding queries | Grounding narrows the answer by pulling in trusted, verifiable sources before AI responds, reducing uncertainty and increasing factual confidence. |
| Fan-out queries | Fan-out widens the answer by exploring adjacent variations and comparison criteria before AI responds, expanding the scope of evaluation and discovery. |

What is the difference between grounding and fan-out?

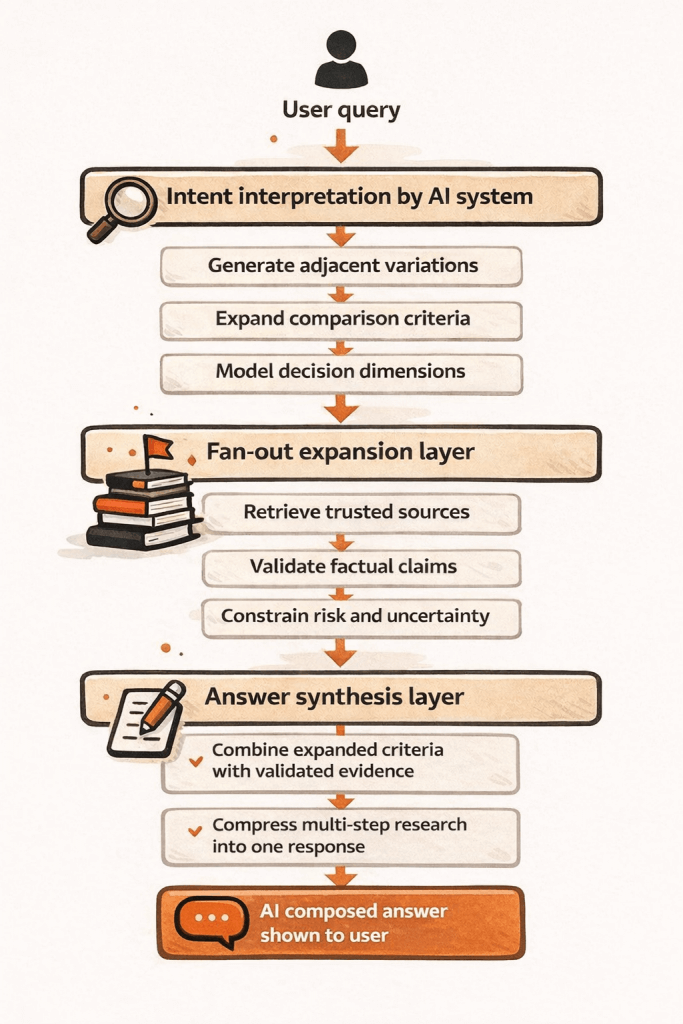

The difference between grounding and fan-out lies in the direction of movement within the AI system. Grounding moves inward toward verification and certainty by retrieving trusted sources that constrain what can be said. Fan-out moves outward toward exploration and comparison by generating and evaluating adjacent variations before an answer is assembled.

Grounding reduces risk and stabilises the informational base so that the response rests on defensible evidence, while fan-out increases breadth and models the surrounding decision landscape so that the response reflects multiple criteria and perspectives.

In practical terms, grounding determines whether you are credible enough to be included, and fan-out determines whether you are comprehensive enough to be considered during compressed research and comparison journeys.

The architectural difference

Grounding queries are stabilising mechanisms: retrieval steps that anchor an AI system’s response in verifiable and trusted material. When a user asks a question, the model often performs internal retrieval to reduce uncertainty and align its output with reliable sources, constraining the response within a safer and more defensible informational boundary.

Fan-out queries operate differently because they expand the search space before synthesis occurs. Instead of validating a single line of reasoning, the system explores multiple variations, sub-questions, and adjacent intents derived from the core query. This increases semantic breadth and allows the model to construct a more comprehensive answer.

In simple structural terms, grounding narrows and stabilises the informational base while fan-out widens and explores the surrounding intent landscape. In AI-driven search experiences, both layers operate before a user sees a single composed answer. One layer determines what information is sufficiently trustworthy to include. The other determines how broad and comparative that answer should become.
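The two layers can be illustrated with a toy sketch. It is not how any real AI search system is implemented: the `Source` type, the `trust` score, the `min_trust` threshold, the term-matching rule, the example URLs, and the choice to run fan-out before grounding are all assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    url: str
    text: str
    trust: float  # hypothetical 0..1 score, not a real published metric


def fan_out(query: str, facets: list[str]) -> list[str]:
    """Widen: the core query plus one sub-query per adjacent facet."""
    return [query] + [f"{query} {facet}" for facet in facets]


def ground(sub_query: str, corpus: list[Source], min_trust: float = 0.7) -> list[Source]:
    """Narrow: keep trusted sources whose text contains every query term."""
    terms = sub_query.lower().split()
    return [
        s for s in corpus
        if s.trust >= min_trust and all(t in s.text.lower() for t in terms)
    ]


# Hypothetical corpus: two trusted pages and one low-trust page.
corpus = [
    Source("tourism-board.example/greece", "greece family summer holiday climate safety", 0.9),
    Source("blog.example/greece", "greece summer holiday climate vibes", 0.3),
    Source("tourism-board.example/spain", "spain family summer holiday costs", 0.8),
]

# Fan-out widens the query; grounding then narrows each branch to trusted evidence.
evidence = {
    sq: [s.url for s in ground(sq, corpus)]
    for sq in fan_out("summer holiday", ["climate", "costs"])
}
```

In this sketch the low-trust blog page never reaches the evidence set even though it matches the climate branch, while each trusted page is only selected for the branches whose criteria it actually covers.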

Grounding queries in practice

Grounding queries exist to reduce hallucination and increase reliability, because they pull authoritative signals into the answer-generation process and constrain the model to sources it can confidently reuse, particularly in contexts where factual precision, commercial clarity, or regulatory alignment are required.

Travel grounding example

Consider a user comparing two destinations and asking which is better for a seven-day summer holiday with children: Spain or Greece. In a traditional search journey, that comparison might involve ten open tabs, multiple blog posts, airline sites, weather lookups, and cost calculators. In an AI-led interface, that research is compressed into a single composed answer.

Before producing that answer, the system retrieves tourism board guidance, safety information, climate data, transport infrastructure details, and structured comparison content from authoritative travel brands, and these retrieval steps function as grounding queries because the system is validating factual differences before collapsing them into one recommendation.

For travel brands operating in research and comparison spaces, grounding visibility now determines whether you are included in that compressed comparison layer. Structured destination versus destination pages, clear seasonal data, transparent pricing explanations, and well organised comparison tables are no longer supplementary assets. They become the raw material AI uses to eliminate the need for multiple clicks and extended browsing sessions.

Grounding in travel research therefore rewards structured comparison frameworks, measurable criteria, and credible trust signals, because these elements enable AI systems to replace entire research sessions with a single synthesised answer that users are willing to accept.

SaaS grounding example

A data leader evaluating vendors may ask whether a cloud data visualisation platform supports row-level security, SOC 2 compliance, private VPC deployment, granular governance controls, and integration with Snowflake or BigQuery. In response, the AI retrieves technical documentation, security whitepapers, trust centre pages, architecture guides, and API references from credible data visualisation vendors. It grounds its answer in material that signals product maturity, compliance readiness, and technical precision.

If a SaaS company building a BI or data visualisation platform produces detailed documentation, transparent security and compliance resources, clearly defined deployment models, and structured integration guides, it significantly increases the probability of being included in this grounding layer. High-level marketing copy about dashboards and insights rarely survives the scrutiny of grounding, because it lacks the explicit detail required for safe reuse.

Grounding visibility in SaaS is therefore built through structured product documentation, trust centres, architectural clarity, and integration specificity, rather than through headline keyword targeting alone.

Ecommerce grounding example

When a shopper asks which laptop offers better value for money between two specific models, the traditional journey involves specification comparisons, YouTube reviews, retailer tabs, and price checks. In an AI interface, that evaluation is compressed into a single answer. The system retrieves specification sheets, pricing data, warranty policies, performance benchmarks, and retailer comparison content before synthesising a recommendation.

These retrieval steps function as grounding queries because they ensure that the comparison rests on verifiable attributes rather than opinion.

Ecommerce brands that publish detailed specification tables, transparent pricing breakdowns, side-by-side comparison tools, and clear returns and warranty information increase their chance of inclusion in this compressed decision layer, whereas vague product descriptions or thin category copy rarely survive grounding scrutiny when AI is effectively replacing multi-step comparison behaviour.

Grounding in ecommerce research is therefore driven by structured product data, measurable attributes, and commercial transparency, because these are the elements that allow AI systems to confidently compress the buyer journey without increasing perceived risk.
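As one possible illustration of structured product data, the sketch below builds a schema.org `Product` description as JSON-LD. The article does not prescribe a markup format, so the use of schema.org here is an assumption, and the product name, SKU, price, and specifications are hypothetical values.

```python
import json


def product_jsonld(name: str, sku: str, price: float, currency: str, specs: dict[str, str]) -> dict:
    """Build a schema.org Product with one Offer and measurable attributes."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",  # price as a fixed two-decimal string
            "priceCurrency": currency,
        },
        # Each specification becomes an explicit name/value pair.
        "additionalProperty": [
            {"@type": "PropertyValue", "name": key, "value": value}
            for key, value in specs.items()
        ],
    }


# Hypothetical product used only for illustration.
doc = product_jsonld(
    "Example Laptop 14",
    "EX-14-001",
    899.0,
    "GBP",
    {"RAM": "16 GB", "Battery life": "12 h"},
)
print(json.dumps(doc, indent=2))
```

The point of the sketch is that every attribute a shopper might compare is stated as an explicit, machine-readable value rather than buried in descriptive copy.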

Fan-out queries in practice

Fan-out queries expand outward from a starting point and allow the AI system to explore adjacent intents before constructing an answer. This is where discovery logic and comparative expansion occur. It is also where AI begins to compress what used to be long research journeys into a single composed response.

Travel fan-out example

A user researching where to go in Europe for a short break may begin with a broad comparison query and trigger multiple internal expansions, branching into city breaks versus beach destinations, budget versus luxury experiences, flight duration constraints, school holiday timing, visa-free travel options, and climate considerations.

In a traditional environment these branches would generate additional searches and additional clicks, yet in an AI environment they are resolved internally before the answer is presented, meaning that fan-out expansions operate inside a compressed comparison journey.

If a travel brand has built layered content that maps destinations against traveller types, budget bands, trip length, seasonal constraints, and experiential themes, it increases the probability of appearing in the final synthesised answer, whereas brands that only target isolated destination pages without modelling real decision criteria risk being excluded from AI-led research journeys that no longer surface every intermediate query.

Fan-out in travel discovery therefore rewards brands that anticipate how travellers compare options, because AI now performs much of that comparison logic on the user’s behalf before a click ever occurs.
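A content team could audit this kind of layered coverage with a simple gap check. The sketch below is a minimal illustration under stated assumptions: the destinations, traveller types, budget bands, and the `published` set are hypothetical, and treating every combination as worth covering is a simplification.

```python
from itertools import product

# Hypothetical decision dimensions a traveller might compare across.
destinations = ["Lisbon", "Crete"]
traveller_types = ["family", "couple"]
budget_bands = ["budget", "luxury"]

# Hypothetical combinations that already have dedicated content.
published = {
    ("Lisbon", "family", "budget"),
    ("Lisbon", "couple", "luxury"),
    ("Crete", "family", "budget"),
}


def coverage_gaps(published: set[tuple[str, ...]], *dimensions: list[str]) -> list[tuple[str, ...]]:
    """Return every combination of the dimensions with no published content."""
    return [combo for combo in product(*dimensions) if combo not in published]


gaps = coverage_gaps(published, destinations, traveller_types, budget_bands)
for combo in gaps:
    print(" / ".join(combo))
```

Each gap is a branch that a fan-out expansion might explore without finding anything from the brand. In practice a team would prioritise the combinations that reflect real traveller demand rather than filling the whole grid.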

SaaS fan-out example

A query for the best data visualisation platform for enterprise teams may expand into variants involving embedded analytics, real-time dashboards, governance controls, integration with major data warehouses, pricing tiers, procurement constraints, and industry-specific use cases such as fintech or healthcare, with the AI exploring these adjacent criteria before synthesising a recommendation that reflects multiple dimensions of evaluation.

SaaS vendors that build deep content around deployment scenarios, integration ecosystems, governance features, performance benchmarks, and vertical applications are better positioned for fan-out visibility, whereas vendors relying solely on generic feature pages about charts and dashboards often fail to surface in expanded research journeys because they have not modelled the complexity of real buying decisions.

Fan-out visibility in SaaS therefore depends on modelling buyer complexity and mapping product capabilities to distinct operational contexts, so that AI systems can expand and evaluate without leaving your content ecosystem.

Ecommerce fan-out example

A shopper beginning with a comparison query such as “best mid-range noise cancelling headphones” may trigger expansions around battery life, comfort for long flights, compatibility with specific devices, brand reputation, durability, warranty length, and discount eligibility. In a legacy journey, each of these criteria would create additional queries and retailer visits. In an AI journey, the system evaluates these branches internally and presents a consolidated answer. Fan-out therefore operates inside a compressed evaluation funnel.

Retailers that structure content around comparison guides, buyer personas, price tiers, performance benchmarks, and scenario-based recommendations are more likely to surface in these expanded yet invisible research journeys, whereas sites that only optimise category pages around head terms without modelling evaluation criteria often miss this layer of visibility because AI resolves the comparison logic before a click ever occurs.

Fan-out in ecommerce research is therefore driven by mapping how buyers compare options across multiple criteria, because AI now executes much of that comparative reasoning on behalf of the user.

Why AI performance reporting changes optimisation priorities

With AI performance now being reported separately from traditional search metrics, brands can observe that impressions and citations do not always correlate with ranking positions. Some content performs strongly in grounding contexts yet weakly in exploratory ones. Other content appears in expanded journeys but fails to anchor authoritative responses.

This reveals a structural truth: AI visibility operates across two invisible layers, the evidence layer and the expansion layer. The evidence layer determines whether your content is trusted enough to be reused. The expansion layer determines whether your content is broad enough to be discovered within compressed research journeys.

Optimising for one without the other creates imbalance, since a brand that only focuses on grounding becomes authoritative but narrow, while a brand that only focuses on fan-out becomes expansive but fragile in trust-sensitive contexts.

Strategic implications for travel, SaaS, and ecommerce

Travel brands must combine regulatory clarity with comparative depth and inspirational breadth, investing in structured factual resources for grounding while also building comprehensive itinerary variations and decision frameworks for fan-out, because discovery increasingly begins upstream in AI summaries long before users click into traditional comparison flows.

SaaS companies must invest in documentation, security transparency, deployment clarity, integration detail, and performance benchmarks to win grounding exposure, while simultaneously building industry-specific content, scenario-driven landing pages, and ecosystem narratives that capture fan-out visibility across complex buying journeys.

Ecommerce businesses must treat policies, specifications, benchmarks, and comparison tools as strategic assets rather than compliance afterthoughts, since these pages anchor grounding, while structured buyer guides, persona-led content, and scenario mapping drive fan-out discovery inside compressed evaluation funnels.

The mindset shift

Traditional SEO asked whether you ranked for a query. AI visibility asks whether you are selected during both validation and expansion. Grounding determines whether you are safe to trust. Fan-out determines whether you are sufficiently broad and contextually rich to matter within a compressed research journey.

In the wake of AI performance reporting, brands must design content systems that deliberately support both layers. Authority without breadth limits discovery, and breadth without authority limits inclusion. Winning in AI environments therefore requires structural thinking that accounts not only for what users type but also for how AI systems interpret, expand, validate, and synthesise those queries before presenting a single composed answer.

Grounding builds credibility. Fan-out builds surface area. Sustainable AI visibility depends on deliberately engineering both into your content architecture rather than relying on traditional ranking logic alone.
