How Googlebot crawl behavior can reflect site health

Crawl frequency refers to how often a website is visited by a web crawler like Googlebot.

Its purpose is to visit websites, crawl them for new and updated information, and add it to Google’s database, known as the index. Nobody knows how much information is out there since search engine bots crawl and index only an estimated 40-70% of the web.

Googlebot crawl rate has a direct impact on how soon new or updated content appears in search engine results pages (SERPs). While crawl rate is not a ranking factor, it is nonetheless a key factor in ensuring that new and updated pages are discovered and indexed speedily, so Internet users can find them.

What is crawl frequency and what affects it?

Crawl frequency refers to how often Googlebot and other website crawlers like Bingbot, DuckDuckBot, and Baiduspider crawl a website. Considering the billions upon billions of webpages on the Internet, how do search engine crawlers, like Googlebot, decide which pages to index? For the sake of efficiency, several factors are prioritised when deciding which pages to crawl, prioritise, and index.

Key Factors That Influence Crawling and Indexing

Page Recognition

A webpage that is frequently cited by other webpages, signals authoritative content, something which is a stated Google priority. These pages are likely to be crawled often. Websites with engaging content that attracts many visitors indicate to web crawlers that they have content worth adding to the index.

Sitemaps and Internal Links

Googlebot uses sitemaps and follows internal links to discover and index web pages. A sitemap acts like a roadmap, laying out the website’s structure, where to find pages and files, and if they are related, making it easy for web crawlers to navigate.

An XML sitemap is a text file that facilitates website crawling and indexing, while internal linking shows crawlers where to go next. Websites with updated XML sitemaps and strategic internal linking provide the ideal digital destination for web crawlers to do their work.

Up-to-date, Relevant, and Useful Content

Googlebot prioritises dynamic websites that frequently publish new content and update existing pages regularly. Websites that don’t show signs of content changes are not prioritised for crawling. News sites and updated product pages are natural candidates for crawling.

Robots.txt

A robots.txt file contains instructions for web crawlers instructing them on which parts of a website they are allowed or not allowed to crawl. It can for instance include an instruction to disallow low-value or duplicate pages and focus on high-value content,

Always double-check that your robots.txt isn’t unintentionally blocking important resources or pages that should be crawled.

Mega Tags (Noindex tag)

Web crawlers can crawl pages with a noindex meta tag, but they will not include these pages in search results.

Page Load Speed

Fast-loading pages have a positive impact on crawl rate because they are easier and quicker for crawlers to process. On the other hand, slow-loading websites impact search engine crawlers by not allowing enough time to access all the pages, resulting in incomplete indexing.

Duplicate Content and Low-Value Pages

Pages with errors (like 404s or long redirects), duplicate pages, and pages with insufficient content affect crawl rate negatively. Googlebot and other web crawlers will deprioritise or entirely skip these pages.

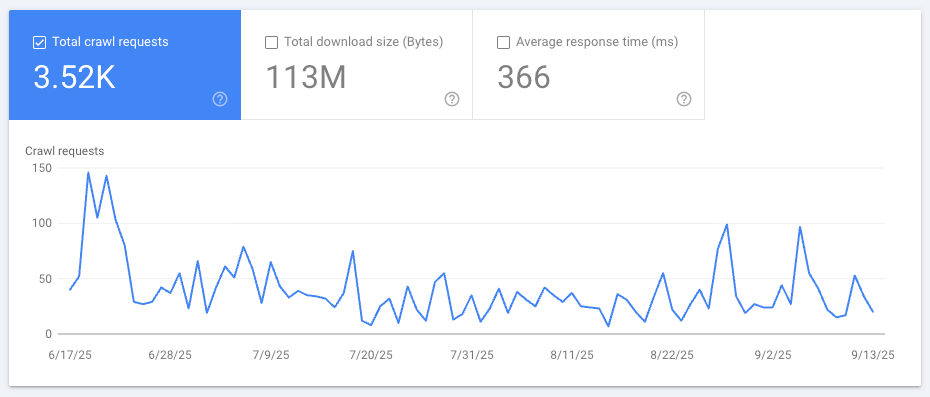

Measuring crawl frequency using log files

Log files are records of every request made to a website. Among other information, log files customarily contain the following data:

- A visitor’s IP address

- A timestamp of the visit

- The URL requested

- The HTTP status code (like 200 OK, 404 Not Found)

- The user agent (whether human or search engine bot)

In terms of measuring crawl frequency, these data points reveal:

- When and how often crawlers access a site.

- Which pages are crawled most, giving a hint as to which pages are favoured by crawlers.

- If crawlers regularly scan the most important web pages (like the homepage, category pages, or frequently updated content).

- Wasted crawl budget. Crawl budget is mostly applicable to large sites and refers to the number of pages a digital spider can explore within a specific time slot. Spending time on low-value pages like filters, tags, or duplicate URLs wastes crawl budget.

- Errors like 404s or redirects, which can also waste crawl budget and slow down crawling.

- Which bots have been visiting the site, and how often.

This data is vital for identifying crawl issues, and is an indication of what can be done to address them.

What crawl frequency tells you about site health

Crawl frequency, or the lack thereof, is a key indicator of how Googlebot and other web crawlers understand and appraise a website.

The various crawl rates and what they indicate

High Crawl Frequency

When Googlebot and other crawlers frequently access a website, it signals that the site is technically sound; search engines can easily discover new content and track changes, which can support faster indexing and better search visibility.

When search engines frequently crawl key pages, such as revenue-driving content or regularly updated blog posts, it indicates they notice and prioritise fresh and value-driven content.

Consistent Crawling of Core Pages

Consistent crawling of core pages like homepages, category pages, and top-level directories indicates that search engines value these assets.

Balanced, distributed crawling across the website

When crawlers spend more time on important sections and less time on less important sections, it’s a logical outcome of a site with a well-structured architecture that clearly indicates which pages are a priority. It also indicates that internal linking is functioning properly.

Quick Indexing of New Content

When newly published content appears in search results shortly after release, it indicates the crawlers noticed it instantly, a sure sign of a healthy crawl rate.

Consistent crawl rates on irrelevant URLs

Crawlers wasting time on irrelevant URLs are also wasting crawl budget because they’re not getting around to crawling valuable content.

Low crawl activity on key pages

When important pages receive little crawl attention, it may be because search engines struggle to find pages due to poor internal linking or incomplete XML sitemaps.

Low crawl rate in general

If Googlebot is ignoring your site, your site may lack original content, your content is duplicated or mirror other web pages too closely.

A sudden spike or drop in crawl rates

Sometimes a site update leads to a sudden spike in crawl rate or a sudden dip. A sharp decline can result from technical issues, such as broken links or server errors. Sharp, unexplained crawl spikes may also point to site issues.

Crawl frequency: best practices and quick fixes

By now you must be wondering how to get Google to crawl your site. Fortunately, attracting frequent bot visits is not rocket science – there are clear steps you can take that will make your site appealing to digital spiders.

How to increase Google crawl rate

Develop a site that Googlebot (and other search engines) see as valuable

Google, and therefore Googlebot, is prioritising E-E-A-T, which stands for Experience, Expertise, Authoritativeness, and Trustworthiness. Sites that display these qualities will experience high crawl rates.

Update content regularly

Google prioritises fresh content, so when you update your content often, Googlebot is more likely to notice it and visit your site regularly. The search engine recognises that the site is active and may visit it more frequently to keep its index up to date.

Let Google know that you have updated your site by requesting indexing in Google Search Console.

Submit a Sitemap

A sitemap acts as a roadmap for web crawlers, showing them how to get to all your website pages. Submit your sitemap through Google Search Console and Bing Webmaster. Keep the sitemap up to date, continuously adding pages.

Request Indexing via Google Search Console

As soon as you have published new content or updated a page, request indexing through the URL Inspection Tool in Google Search Console. This will signal Googlebot to crawl the page and might even prompt it to act soon.

Pay attention to internal links

Strategic internal linking makes it easy for crawlers to navigate your site. Create a logical site structure using descriptive anchor text and linking to relevant content. Monitor for duplicate content linked to different URLs; it’s a waste of time for crawlers.

Pay attention to site speed

Fast-loading pages make crawling easier and more efficient. It’s essential to ensure optimal sight speed, as web crawlers operate on a limited budget. If a crawler spends too much time on images that are not optimised, it may not have sufficient time to explore the accompanying text.

Addressing Crawl Issues

Sometimes a site is crawled too often, or not often enough. In both cases, there are steps you can take to manage the issue.

Addressing Over-Crawled Pages

Edit the robots.txt file

When Googlebot spends too much time on low-value pages like login pages or terms and conditions, instead of high-value content, such as the home page or blog content, the crawl budget is being misappropriated. Since the robots.txt file instructs web crawlers on which pages to access and index, edit it so search engine bots are directed to relevant pages. Implement a “disallow” directive that prevents them from crawling irrelevant pages.

Implement Canonical Tags

When a website has multiple versions of the same content, web crawlers may crawl and index them all separately, wasting crawl budget. Implement canonical tag for parameterised URLs (e.g., ?sort=price) or printer-friendly pages, pointing them to the main, canonical version to avoid duplicate crawling.

Apply Noindex Tags

Applying noindex tags allows web crawlers to crawl a page, but not to index it. Use it for pages you don’t want to appear in search results, such as login pages, admin pages, duplicate content pages, or pages.

Addressing Under-Crawled Pages

Knowing how to increase Google crawl rate is essential to ensure new content gets indexed quickly. The following steps can encourage more frequent visits by web crawlers.

Strengthen Internal Links

Optimal website architecture that’s easy for search engines to navigate allows for clear internal links between new and priority content and the homepage, the navigation bar, and other high-authority.

Audit Server Response and Error Codes

Regularly check for 4xx/5xx errors using Search Console or log files, fix any broken links, and aim to keep response times low for a smoother crawl.

Keep an Updated Sitemap

The XML sitemap must include all new, modified, or high-value pages. Submit the updated sitemap through Google Search Console as soon as changes are made.

Avoid Duplicate or Thin Content

Duplicate and thin content pages waste crawl times. Consolidating thin pages and eliminating duplicates will boost crawl efficiency.

Key takeaways

Crawl frequency is a vital indicator of a website’s technical health and search accessibility. Log file data offers clear insights into which pages Googlebot crawled, how often, and if any crawl issues were encountered.

A well-distributed Google crawl rate indicates a site with a clear internal architecture that facilitates efficient crawling, while sudden crawl drops and spikes may signal crawl budget waste due to issues such as poor internal structure, broken links, or duplicate pages.

Log files provide a wealth of information about crawl frequency and potential issues that may be negatively affecting it. Taking steps like optimising internal linking, maintaining a clean site architecture, and updating content regularly can encourage more efficient crawling and indexing.

Ultimately, an optimal Google crawl rate is vital for effective SEO, ensuring timely indexing and a dominant ranking position.