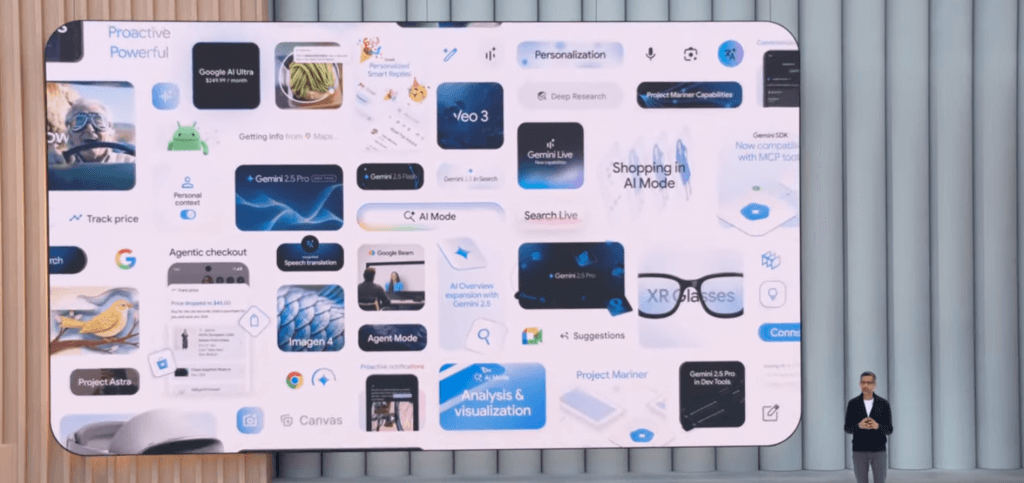

The future of Google is AI-driven and agentic

It wasn’t a hard call to guess the main topic of Google I/O 2025. Given that Google held a completely separate event for its Android news, I expected the I/O developer conference to be AI-heavy, and it was.

While Gemini 2.5 and AI Mode are the headline takeaways, the focus for me is on four areas:

- Ecosystem – The release of AI Mode and wider access to Gemini across multiple devices, including XR, feel like Google working to keep users in its ecosystem and fend off potential competitors. It’s the likes of Meta, X/Grok, and other LLM providers that Google is worried will entice users away.

- “Hyper relevant” – The presentations repeatedly stressed that AI Mode would search the internet to find the most relevant results. It felt like a hint at what the Hidden Gems update was meant to be.

- Agentic – Google’s vision is for AI to act independently on the user’s behalf and remove the need to “search”. Businesses need to be ready to handle agent queries and understand how to stay visible in an agentic world.

- Personal context – While AI Mode is big, let’s not understate the fact that when Google rolls out “personal context” later in 2025, true personalisation will come with it. One person’s SERP will differ from another’s, making focused keyword rankings even less relevant than they are now.

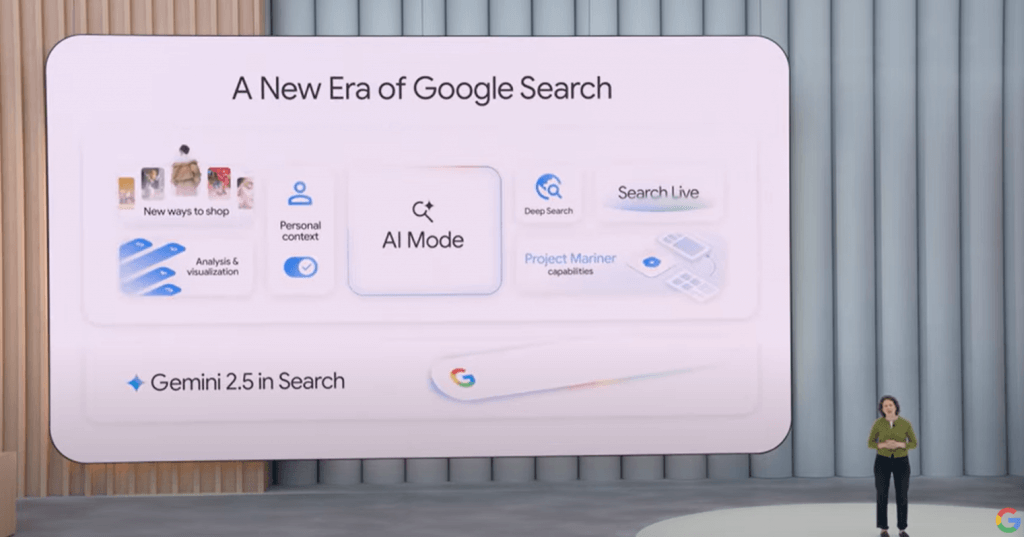

Gemini 2.5

Google is baking a custom version of Gemini 2.5 directly into AI Mode and AI Overviews.

Google says this makes responses faster, more accurate, and more context-aware, with one consistent model powering different user experiences.

That consistency is key.

It’s not just about improving Search; it’s about unifying how AI operates across Google’s ecosystem, giving users a consistent experience. This is a huge leap forward for Google.

AI Mode

At Google I/O 2025, the debut of AI Mode in Google Search marked a turning point, offering a clearer look at how Google is integrating large language models directly into the core of its Search experience.

AI Mode is starting to roll out, beginning in the US, where users will see a new “AI Mode” tab in Search and the Google app, with a broader rollout to follow. While AI Mode will further evolve the Search ecosystem, “personal context” will be the game-changer.

What makes AI Mode especially interesting is how it works and what it suggests about the future of Search.

AI Mode will also serve as a testbed for new ideas. Google framed it as the place where experimental features appear first.

Query “Fan-Out”

At the heart of AI Mode is something called “query fan-out.”

This means Google breaks your query into multiple sub-questions and runs them in parallel, which lets it uncover more relevant and sometimes surprising information. It’s a big deal because it is an open acknowledgment from Google that the traditional, single-query search engine results page (SERP) can only go so far.

With fan-out, Search becomes more of an orchestrator, digging deeper, connecting dots, and synthesising insights in ways users typically wouldn’t manage on their own. That includes evaluating individual chunks of your content rather than the page as a whole.

Fan-out also changes how results are delivered, moving away from shoehorning everything into a single page (the SERP) and toward layered, multi-step answers.
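Google hasn’t published how query fan-out works internally, so the Python below is only a toy sketch of the general idea, not Google’s method. Everything in it is an assumption for illustration: the `CHUNKS` index, the `decompose`, `retrieve`, and `fan_out_search` helpers, the fixed facet list standing in for model-generated sub-questions, and the simple vote count used to merge results. It shows one broad query being split into sub-questions, those sub-questions running in parallel, and the results merged at passage (chunk) level rather than page level.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy index of content chunks (passage ID -> text).
# It stands in for a real web index and exists only for illustration.
CHUNKS = {
    "hotel-guide#p3": "boutique hotels in lisbon near the river",
    "hotel-guide#p5": "lisbon hotels with rooftop restaurants",
    "food-blog#p7": "best restaurants in lisbon for seafood",
    "food-blog#p9": "lisbon restaurants with outdoor seating",
    "museum-list#p2": "top museums in lisbon open on weekends",
    "porto-guide#p4": "restaurants in porto with river views",
}


def decompose(query: str, topic: str) -> list[str]:
    """Hypothetical fan-out step: turn one broad query into sub-questions.

    A production system would presumably generate these with a language
    model; this sketch just pairs the topic with a fixed list of facets.
    """
    facets = ["hotels", "restaurants", "museums"]
    return [query] + [f"{topic} {facet}" for facet in facets]


def retrieve(sub_query: str) -> set[str]:
    """Toy keyword retrieval: IDs of chunks containing every query word."""
    terms = set(sub_query.lower().split())
    return {cid for cid, text in CHUNKS.items() if terms <= set(text.split())}


def fan_out_search(query: str, topic: str) -> list[tuple[str, int]]:
    """Run all sub-queries in parallel, then merge results per chunk.

    Chunks matched by more sub-queries rank higher (a simple vote count);
    ties are broken alphabetically so the output is deterministic.
    """
    sub_queries = decompose(query, topic)
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(retrieve, sub_queries))
    votes = Counter(cid for hits in results for cid in hits)
    return sorted(votes.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    print(retrieve("plan a weekend in lisbon"))
    print(fan_out_search("plan a weekend in lisbon", "lisbon"))
```

Here `retrieve("plan a weekend in lisbon")` on its own matches no chunk, while `fan_out_search("plan a weekend in lisbon", "lisbon")` surfaces hotel, restaurant, and museum passages, with the passage matched by two sub-questions ranked first. A vote count is the simplest possible merge; a real system would presumably weigh relevance far more carefully.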

Personal Context

For those who opt in, AI Mode can use personal context to make recommendations more relevant, using things like past searches or data from connected apps like Gmail.

For example, it might suggest restaurants with outdoor seating because you’ve booked similar places in the past. It’s undeniably powerful but raises fundamental questions about privacy, data use, and trust. Google insists this is all opt-in, but the broader takeaway is clear.

Search is becoming more customised and less consistent from person to person.

What stood out most to me? When Google mentioned “personal context” on stage, it became apparent that Search and SEO are no longer what they once were.

The idea that results can differ based on what’s in your Gmail inbox, not just your queries or browsing history, is a seismic shift. It signals the end of a one-size-fits-all Search and the start of a deeply personalised, AI-driven experience.

Agentic Search – Project Mariner

By integrating capabilities from Project Mariner, Google has also started to bring agent-like features into Search. These aren’t just conveniences; they’re steps toward AI that acts on your behalf.

Think ticket purchases, restaurant bookings, or appointment scheduling handled directly through Search, using integrations with services like Ticketmaster, Resy, and Vagaro. The use cases are narrow for now, but it’s easy to imagine how this expands over time. Search is evolving into a personal assistant that gets things done.

AI Mode Shopping

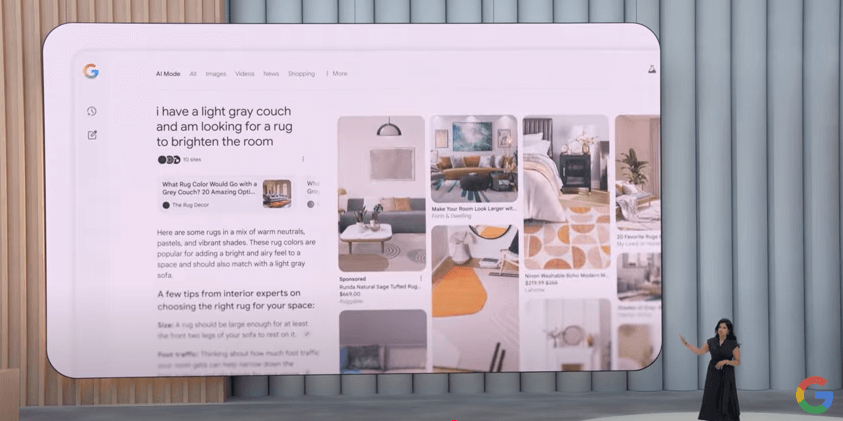

AI Mode also makes shopping more thoughtful and personal. By combining Gemini with Google’s Shopping Graph, shopping in Search not only shows results but also helps users refine and explore choices, track prices, and even make purchases directly.

Google has also introduced a Pinterest-style “mood board” as part of AI Mode to help users develop queries and gain “inspiration”. My initial thought is that this is close to the AI product image generation tool Google had in beta testing, which let users generate AI images of products and then use Lens to find matching items when text searches weren’t cutting it. Secondly, the example shown by Vidhya Srinivasan, Google’s VP/GM, Advertising & Commerce, contains sponsored results.

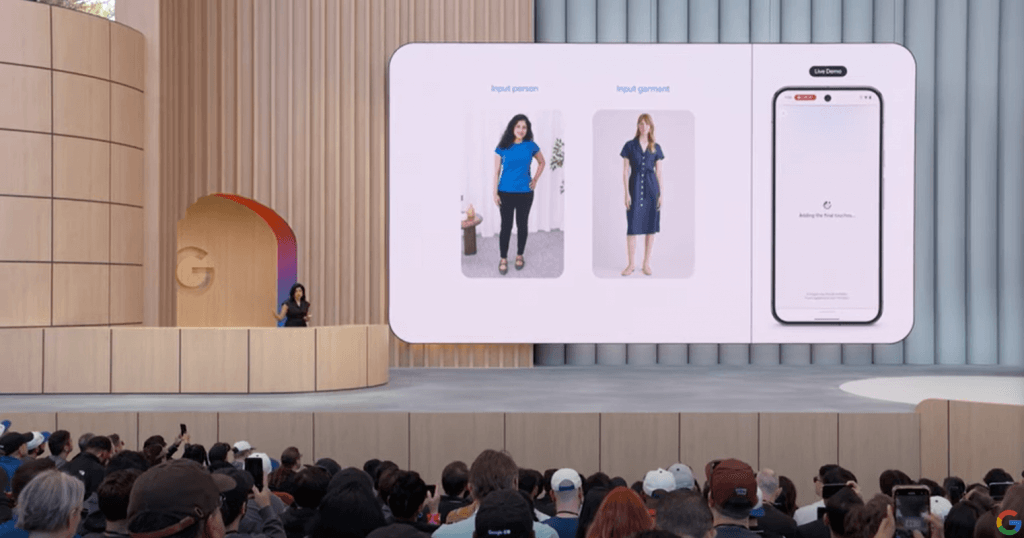

Google also launched virtual try-on (VTO). First shown in 2023, the newly released version lets you upload your own image alongside the garment image. The AI then shows you how the garment would ‘fit’.

What has changed and what still matters

For a number of years, Google’s features have acted as an intermediary between businesses and consumers. These new developments cement that role further.

We’re entering a world where there isn’t a single version of Google Search. There’s traditional Search, and then there is AI Mode. Each has its own logic system and will have different adoption rates across different audiences and sectors, as well as different stages of the funnel and query types.

This means our role as SEOs is changing. We need to adapt and report on different metrics and KPIs for the two types of Search.

Understanding how AI reads, interprets, and reshapes content, and how AI-focused strategies can work alongside traditional SEO, is key. Success depends on keeping pace with a system that not only indexes the web but also rethinks how it’s presented, interacted with, and acted upon.

The three pillars of Technical SEO, Content, and Brand haven’t necessarily changed. However, the mindset we apply to them and how we define success have shifted hugely. It’s now on us to embrace a new way of working.

Worried about what AI Mode means for your brand’s visibility?

Whether you’re exploring the risks or looking for strategic opportunities, we help marketing leaders make sense of the shifting landscape and stay ahead of it. Let’s talk.