How to recreate aspects of Google Search Console in Excel using COUNTIFS statements

Google Search Console (GSC) is very powerful software with many a useful tool, and there’s no way I could really recreate any element of it better myself. However, using Excel and some nifty formulas we can create a very reasonable emulation which gives us access to some added data that GSC doesn’t supply.

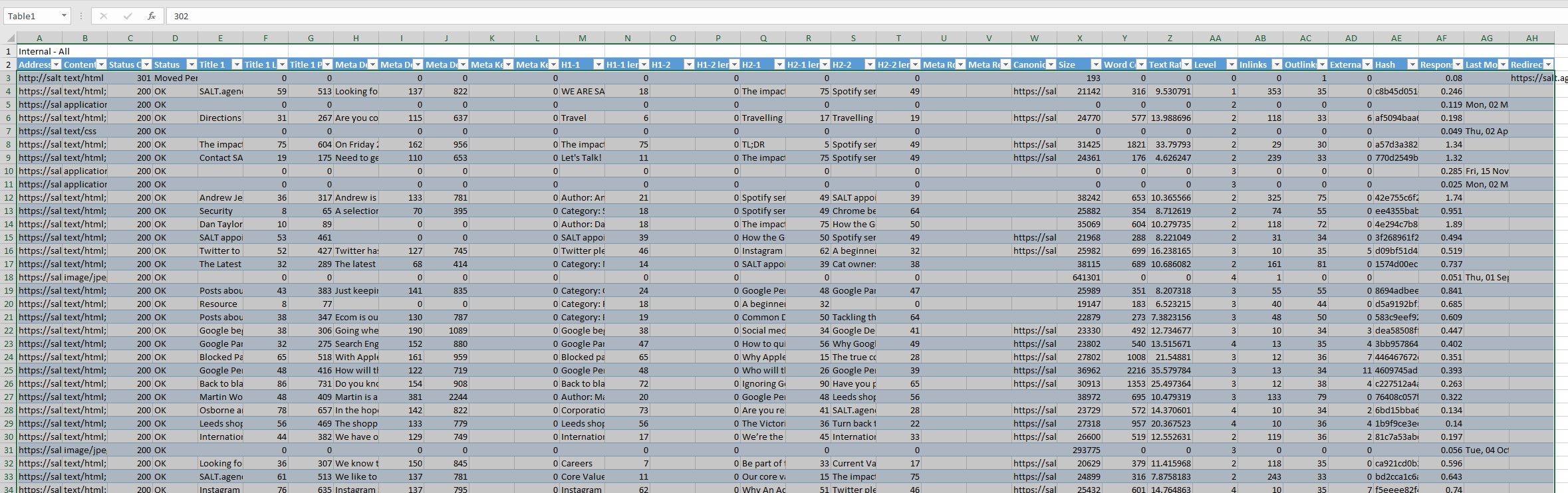

For instance, take the HTML improvement section, it accurately lists and tells you where these improvements are, but what if you wanted know about H1s, H2s or H3s? Or which pages have missing meta descriptions? You can find out all of these with relative ease and all you’ll need is crawl software and a reasonable knowledge of COUNTIFS formulas.

Preparation

Firstly, you’ll need to crawl the website, I strongly recommend using Screaming Frog (there are other tools available such as OnCrawl, but I think Screaming Frog is the best), with the free version you are allocated 500 URLs per crawl, if you need to crawl more URLs than this, then it’s £150 for a yearly licence.

The default spider configuration is fine for the purposes of this guide, I’d only alter it if you have reason to and know what you’re doing. Once the crawl is finished, save it, export it, open it up in Excel and format it to a table; this is the data we’ll be manipulating to recreate answers that GSC gives.

The formula breakdown

To accurately construct this formula we need to think like Googlebot does, and take in all the factors that Googlebot would have to consider when crawling a site, so if Googlebot was looking for missing meta descriptions, how would it do this?

Firstly, the page would have to be accessible, that is not blocked in robots.txt or more importantly (in this case) blocked in meta robots, then beyond that the page type would need to be text/HTML as nothing else can contain a meta description. Finally, the meta description would have be blank (of course); we can create a formula that will do this which will count 1 when all these conditions are met:

=SUM(COUNTIFS(Table1[[#All],[Content]],"text/html; charset=UTF-8",Table1[[#All],[Meta Robots 1]],{“noodp, noydir",””},Table1[[#All],[Meta Description 1]],""))

Content Type

The most popular character encoding in 2016 is, by far, ‘text/html; charset=UTF-8’, with 87.8% of websites using it, but sometimes you’ll encounter websites with different pages in different character encodings, for example ‘ISO-8859-1’, or sometimes people don’t even add an encoding type (which should be raised straight away).

The point is there is a lot of criteria that will vary from website to website so it’s important to familiarise yourself with the website first so that you can write accurate formulas to extract the data you want. A lot of times you’ll find that you need to use an {OR} statement, for example if the website has multiple character encoding types:

Table1[[#All],[Content]],{“text/html; charset=UTF-8”,” text/html; charset=UTF-8=ISO-8859-1”},

Meta Robots

I am counting pages that either have no meta robots (“”) or a no-directory directive (“noodp, noydir”) because those are the robots on the website I’m working on.

However, other websites may have different tags such as “index”, “all”, ”index, follow” etc. These mean the same thing for us: these pages are indexable, therefore we want to count them. You should familiarise yourself with what type of robots you’re working with as soon as you export the crawl to make the counting easier, like I mentioned earlier about the Content Type.

It should be noted that it’s not possible to include all variations of all indexable meta robots in the {OR} brackets in the formula, for example:

{“noodp”,”nooydir”,”noodp, nooydir”, “index”, “index, follow”, “all”, “noodp, nooydir, index”, “noodp, follow”, “nooydir, follow”} … and so on.

In short, this is due to the way Excel sees arrays, the more OR arguments you add, the more complex the formula gets (and is more likely to break), so we need to spend that extra little bit of time customising the formula to suit our own meta robots.

Using the formula

Now that you know what to look out for and how the formula works, let’s take a look at some of the ways in which we can use it to benefit SEO:

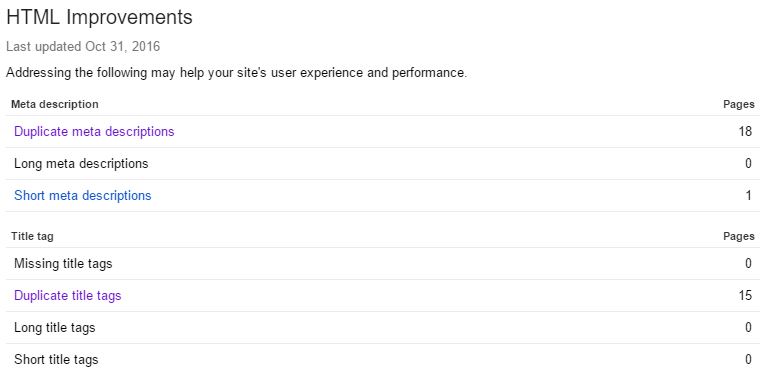

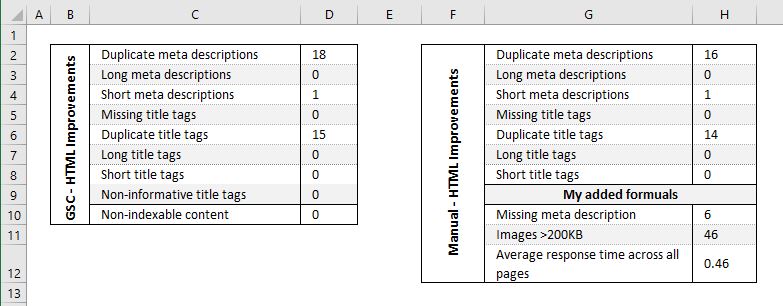

Imitating Google Search Console

When I first started experimenting with these formulas, my original idea was to test how accurate GSC was, so let’s do this again and create our own count of all the HTML Improvements in GSC, duplicated meta titles, short meta descriptions etc. But first we need to add two more columns to our manual crawl to count the duplicate titles/descriptions (otherwise the formula would become ridiculously long and confusing).

This formula will count duplicated meta titles that are not blocked in meta robots:

=SUM(COUNTIFS(Table1[[#All],[Title]],[@Title],Table1[[#All],[Meta Robots 1]],""),(COUNTIFS(Table1[[#All],[Title]],[@Title],Table1[[#All],[Meta Robots 1]],"noodp, noydir")))

You can use this same formula to count duplicated meta descriptions, just replace ‘Title’ with ‘Meta Description 1’ (or whatever your column is called).

Now that you have an extra two columns showing all duplicated titles/descriptions, we can easily perform formulas on them.

Duplicate meta titles

=SUM(COUNTIFS(Table1[[#All],[Content]],"text/html; charset=UTF-8",Table1[[#All],[dupe titles]],">1",Table1[[#All],[Meta Robots 1]],{"noodp","noydir","","noodp, noydir","index,follow","all"}))

Missing meta titles

=SUM(COUNTIFS(Table1[[#All],[Content]],"text/html; charset=UTF-8",Table1[[#All],[Meta Robots 1]],{"noodp, noydir",""},Table1[[#All],[Title]],"",Table1[[#All],[Status Code]],"200"))

Meta title > 56

=SUM(COUNTIFS(Table1[[#All],[Content]],"text/html; charset=UTF-8",Table1[[#All],[Title Len.]],">56",Table1[[#All],[Meta Robots 1]],{"noodp","noydir","","noodp, noydir","index,follow","all"}))

Meta title < 40

=SUM(COUNTIFS(Table1[[#All],[Content]],"text/html; charset=UTF-8",Table1[[#All],[Title Len.]],"<40",Table1[[#All],[Meta Robots 1]],{"noodp","noydir","","noodp, noydir","index,follow","all"},Table1[[#All],[Status Code]],"200"))

And for the meta descriptions, just like I mentioned above, replace ‘Title’ with ‘Meta Description 1’.

New HTML Improvements

The below are just a few ways you can use variations of the formula to find out extra information that isn’t in GSC:

Missing H1 tags:

=COUNTIFS(Table1[[#All],[Content]],"text/html; charset=UTF-8",Table9[[#All],[Meta Robots 1]],{"noodp, noydir",””},Table9[[#All],[H1-1]],"")

Missing Meta Descriptions:

=SUM(COUNTIFS(Table1[[#All],[Content]],"text/html; charset=UTF-8",Table1[[#All],[Meta Robots 1]],{“noodp, noydir",””},Table1[[#All],[Meta Description 1]],"",Table1[[#All],[Status Code]],"200"))

Multiple Meta Titles:

=SUM(COUNTIFS(Table1[[#All],[Content]],"text/html; charset=UTF-8",Table1[[#All],[Title 2]],"*",Table1[[#All],[Meta Robots 1]],{"","noodp, noydir"},Table1[[#All],[Status Code]],"200"))

Note: This formula will only work if you actually have multiple titles. I’d recommend scanning your headers looking for a ‘Title 2’ column first before using this formula, most of the time sites don’t have multiple titles.

Identifying large images

Still using a variation of the same formula, you can highlight the number of images that are over 200KB (or whatever size is too big for the site you’re currently working on).

Obviously, unnecessarily big and uncompressed images are in no way optimal for site performance, and this is usually one of the main reasons why a site is loading slowly.

Screaming Frog already has a feature similar to this, it will tell you the images over 100KB (which is the majority these days, even compressed) so it’s useful to be able to alter this size variable:

=COUNTIFS(Table1[[#All],[Content]],{"image/jpeg",”image/png”,image/gif”},Table9[[#All],[Size]],">200000")

I am counting three different types of image here: JPEG, PNG and GIFs, the site you are working on may have more or less.

An image size of 100000 in Screaming Frog is equal to 100KB.

Average response time

Response time is not to be confused with load time. The response time is how long it takes for your browser to hear back from the server, whereas the load time is the total amount of time it takes to load the page and receive all the resources. Nonetheless, this can still be useful at diagnosing the cause slow websites, whether it’s the server, the content, the code or something else.

=AVERAGEIFS(Table1[[#All],[Response Time]];,Table1[[#All],[Content]],"text/html; charset=UTF-8",Table1[[#All],[Meta Robots 1]],{"noodp, noydir",""})

The AVERAGEIFS formula works in a very similar way to the COUNTIFS, the only difference is you select the array of cells you wish to average at the start of the formula, and then you continue to select the other criteria the cell must meet to be included in the average.

Comparing results to GSC

So now we have quite a bit of our own data let’s compare it against the data in GSC to see how similar it is.

You’ll see that the results are almost exactly the same as each other, only minor discrepancies which are due to the fact that Google Search Console does not update in real time, so in theory if you were to not touch the website in a week and compare the results again, they should identical.

But for now, I am happy with the result, it proves that GSC is accurate and our own queries are too.

Conclusion

Although not groundbreaking, and although there are probably already tools available on the internet that will do a similar thing as what’s been accomplished here, in my opinion it’s better to do things yourself, you are left with a much better understanding of how things are working most of the time, much more accurate data.

From here you can start monitoring these issues more closely and keep track of improvements, or use it to spot potentially harmful trends developing over time.