Avoiding Digital Gluttony: Optimizing Website Performance & Load Speed

As web technologies evolve and an ever-increasing number of tools, frameworks, and libraries become available, the number of poorly performing websites grows too.

As we add animations, images, JavaScript, and reams of CSS, we often forget to consider the impact these have on our sites’ SEO.

While these can add a lot of value, problems start to arise when they are mismanaged, overused, or poorly implemented.

Today, developers are spoilt for choice, especially when you consider npm, a registry where developers can publish, access, and download well over a million JavaScript packages.

We can pick any library, framework, or theme from a dizzying number of sources and throw it into a project with little thought for maintenance, optimization, security, or accessibility.

The days of simple websites built with HTML, CSS, and minimal JS are behind us, and as the technologies we use continue to evolve, we must balance technical SEO and page speed against the pull of the latest frameworks and libraries.

Think critically and audit your sites

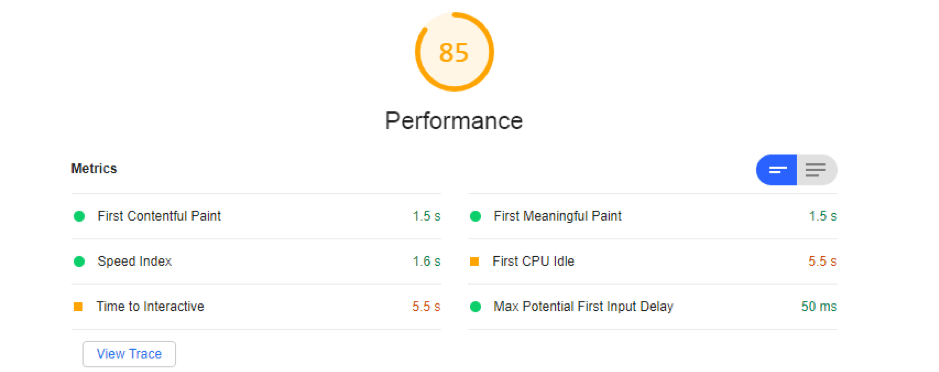

With that in mind, here are suggestions for improving the performance, usability, and SEO aspects of your site. Before making any changes, I’d recommend starting with a Lighthouse audit for an overview of the areas that you need to adjust.

Its detailed suggestions are great for highlighting quick wins.

What’s more, it provides extra information and links to documentation, should you be unsure about the improvements it advises.

You can find the audit panel inside the Google Chrome dev tools and here’s how it looks:

This is a good starting point to identify any ‘obvious’ issues such as large images, render-blocking resources and more.

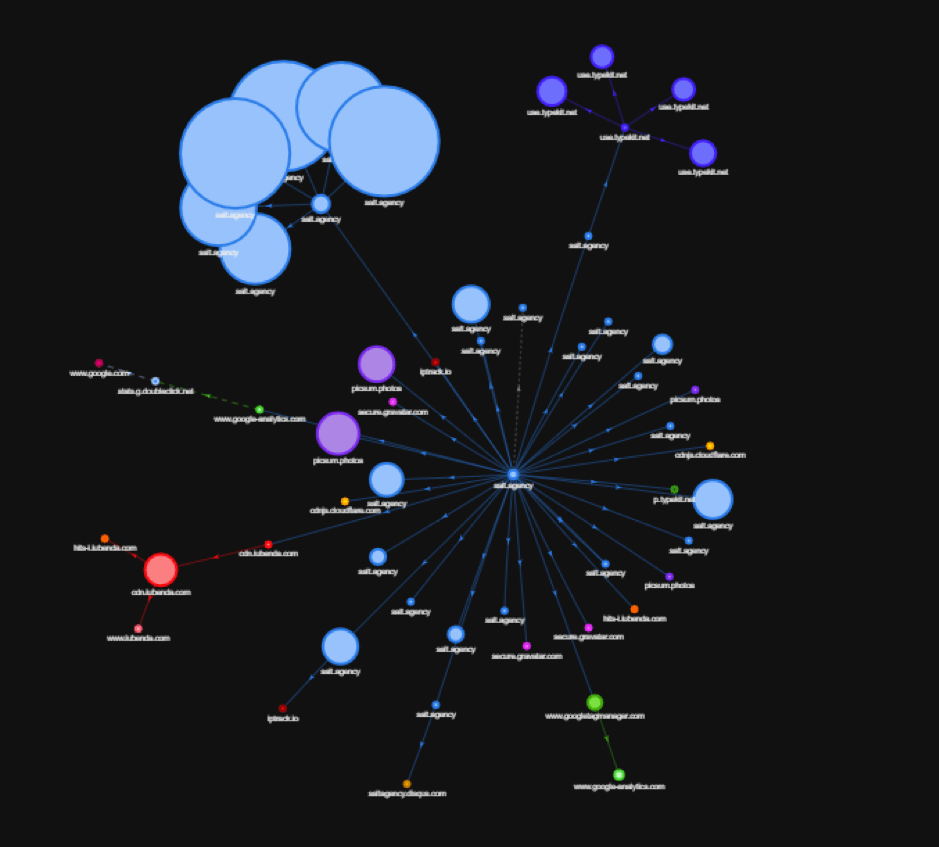

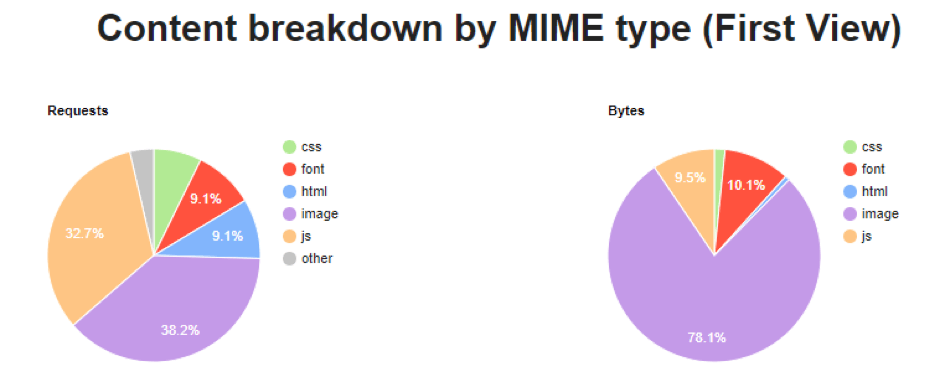

Another great tool available, webpagetest.org is one I highly recommend. It does an excellent job at granulating every request a web page has to make and provides an awesome alternative to the to traditional network waterfalls:

There are plenty more tools out there that let you analyze each individual request so you can drill down further. However, most of them only point out issues and offer no ‘real’ explanation.

So let’s take a deeper look at some of the problem areas these tools highlight, and break down why they slow your site down and have a direct impact on SEO.

Thinking about HTML

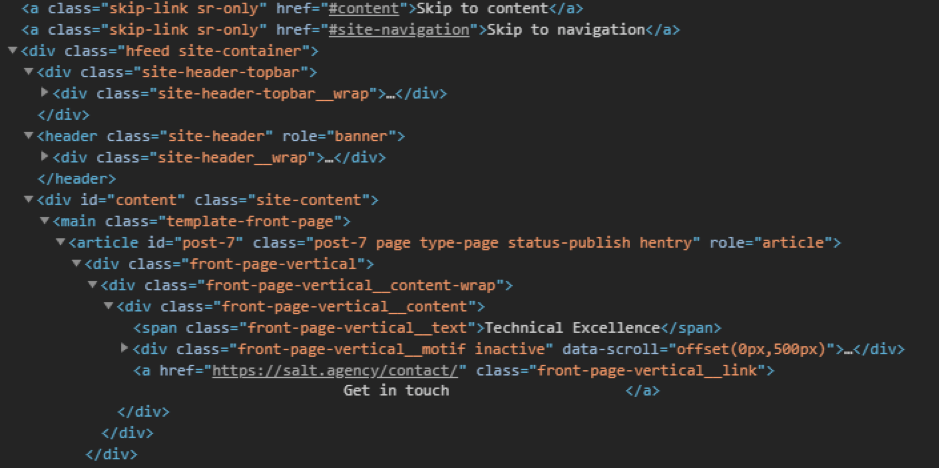

As the browser receives your site, it will tokenize your HTML and begin to construct the Document Object Model (DOM).

This is an intensive process, so it is crucial that all important resources are optimized and loaded in the correct order to maximize speed and user experience.

If you want some real-world examples of how speed directly correlates with organic rankings, conversion rate, and so on, I highly recommend visiting WPO, which has an excellent list of case studies.

Common code-bloating HTML mistakes

Google recommends that your DOM on any given page has:

- Maximum of 1,500 nodes
- Maximum depth of 32 nodes
- No parent node with more than 60 child nodes
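If you want to check these limits programmatically, here’s a minimal sketch in TypeScript. It assumes a simplified, hypothetical `DomNode` shape (`{ tag, children }`) rather than a real browser API; in the browser you would walk `document.body` instead. Counting the root as depth 1 is also my own assumption.

```typescript
// Hypothetical, simplified node shape for this sketch; in a browser you would
// walk document.body and its children instead.
interface DomNode {
  tag: string;
  children: DomNode[];
}

interface DomStats {
  nodeCount: number;   // total nodes in the tree
  maxDepth: number;    // deepest level reached (root counts as depth 1)
  maxChildren: number; // largest number of direct children on any one node
}

// Walk the tree once with an explicit stack, recording the three measurements.
function measureDom(root: DomNode): DomStats {
  const stats: DomStats = { nodeCount: 0, maxDepth: 0, maxChildren: 0 };
  const stack: Array<[DomNode, number]> = [[root, 1]];
  while (stack.length > 0) {
    const [node, depth] = stack.pop()!;
    stats.nodeCount += 1;
    stats.maxDepth = Math.max(stats.maxDepth, depth);
    stats.maxChildren = Math.max(stats.maxChildren, node.children.length);
    for (const child of node.children) {
      stack.push([child, depth + 1]);
    }
  }
  return stats;
}

// The thresholds listed above.
const DOM_LIMITS: DomStats = { nodeCount: 1500, maxDepth: 32, maxChildren: 60 };

// Return a human-readable warning for every limit that is exceeded.
function domWarnings(stats: DomStats, limits: DomStats = DOM_LIMITS): string[] {
  const warnings: string[] = [];
  if (stats.nodeCount > limits.nodeCount) warnings.push(`too many nodes: ${stats.nodeCount}`);
  if (stats.maxDepth > limits.maxDepth) warnings.push(`too deep: ${stats.maxDepth}`);
  if (stats.maxChildren > limits.maxChildren) warnings.push(`too many children: ${stats.maxChildren}`);
  return warnings;
}
```

A single list with 61 items, for example, trips the “too many children” warning while staying far below the node and depth limits.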

Ensure that your markup doesn’t contain any unused or empty elements. Every element has to be read and tokenized by the browser, added to the DOM, possibly styled with CSS, and then rendered. We want to make this process as painless as possible for Googlebot.

A pet peeve of mine is the use of empty nodes as ‘spacers’. They are unnecessary, because a CSS spacer class with the relevant margin properties does the same job without wasting resources on empty tags. Empty nodes add code bloat that incrementally damages your website’s speed.
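To hunt for empty elements, a sketch like the one below may help. It runs over a simplified, hypothetical `HtmlNode` tree (text nodes tagged `#text`) and flags elements that have no child elements and no visible text, skipping void elements such as `img` and `br`, which are empty by design. Treat the results as candidates to review rather than automatic deletions: an empty `div` used as a mount point for a script, for instance, is legitimate.

```typescript
// Hypothetical, simplified node shape: elements have a tag name, text nodes
// use the tag "#text" and carry their content in `text`.
interface HtmlNode {
  tag: string;
  text?: string;
  children: HtmlNode[];
}

// HTML void elements never have content, so they are not flagged.
const VOID_ELEMENTS = new Set([
  "area", "base", "br", "col", "embed", "hr", "img",
  "input", "link", "meta", "source", "track", "wbr",
]);

// Return a path such as "main > div" for every element with no child elements
// and no non-whitespace text.
function findEmptyElements(node: HtmlNode, path: string = node.tag): string[] {
  if (node.tag === "#text") return [];
  const found: string[] = [];
  const hasContent = node.children.some(
    (child) => child.tag !== "#text" || (child.text ?? "").trim() !== ""
  );
  if (!hasContent && !VOID_ELEMENTS.has(node.tag)) {
    found.push(path);
  }
  for (const child of node.children) {
    found.push(...findEmptyElements(child, `${path} > ${child.tag}`));
  }
  return found;
}
```

Whitespace-only text still counts as empty here, which catches the classic `<div> </div>` spacer.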

Tag relevance is also essential here. Using the correct semantic HTML is best practice and can also improve your site’s overall accessibility, which adds to the wider scope of SEO.

Thinking about CSS

Once the browser has found your stylesheet link in the HTML, it will go off and fetch the file.

This is when render-blocking can occur: the browser won’t render the page until it has downloaded and processed the CSS.

The larger your CSS files are, the longer render-blocking goes on and the slower your site becomes.

With this in mind, it is vital that your CSS is written correctly and minified so that users landing on your website don’t see a blank page for 5 seconds (they will likely bounce).
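To show what minification actually strips out, here’s a deliberately naive CSS minifier. It is a sketch only: it doesn’t parse CSS and will mangle the contents of strings, so in a real project you’d use an established minifier such as cssnano or your bundler’s CSS minimizer.

```typescript
// Naive CSS minifier, for illustration only. Known gap: it does not parse the
// CSS, so string contents such as content: "a ; b" can be altered.
function naiveMinifyCss(css: string): string {
  return css
    .replace(/\/\*[\s\S]*?\*\//g, "")  // strip /* comments */
    .replace(/\s+/g, " ")              // collapse runs of whitespace to one space
    .replace(/\s*([{};,>])\s*/g, "$1") // drop spaces around braces, ;, commas and >
    .replace(/:\s+/g, ":")             // drop the space after "color:" etc.
    // Spaces *before* a colon are kept: ".nav :hover" and ".nav:hover" differ.
    .replace(/;}/g, "}")               // the last semicolon in a block is optional
    .trim();
}
```

For example, a commented, indented `.btn` rule with `color: red;` and `margin: 0 auto;` comes out as `.btn{color:red;margin:0 auto}`.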

Unfortunately, clean, structured CSS seems to get overlooked quite frequently, so I’d highly recommend taking the time to address this issue.

Ensure your styles are relevant, appropriate and not duplicated. Additionally, create classes to handle styling that is used in multiple places and keep your code DRY (don’t repeat yourself).
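Duplicated rules are easy to miss by eye. The sketch below groups rules whose declarations are identical (ignoring order and spacing) so you can fold them into one shared class. It assumes a flat stylesheet with no nested blocks such as `@media`, and the selector names used in the example are hypothetical.

```typescript
// Group selectors whose declaration blocks are identical, ignoring declaration
// order and whitespace. Assumes a flat stylesheet (no @media or other nesting).
function findDuplicateRuleBodies(css: string): string[][] {
  const bySignature = new Map<string, string[]>();
  const rule = /([^{}]+)\{([^{}]*)\}/g;
  let match: RegExpExecArray | null;
  while ((match = rule.exec(css)) !== null) {
    const selector = (match[1] ?? "").trim();
    // Normalize "color : #222" to "color:#222", drop empties, sort for order-independence.
    const signature = (match[2] ?? "")
      .split(";")
      .map((decl) => decl.trim().replace(/\s*:\s*/, ":"))
      .filter((decl) => decl.length > 0)
      .sort()
      .join(";");
    const group = bySignature.get(signature) ?? [];
    group.push(selector);
    bySignature.set(signature, group);
  }
  // Only signatures shared by two or more selectors are duplicates.
  return Array.from(bySignature.values()).filter((group) => group.length > 1);
}
```

Given `.card-title` and `.modal-title` rules that both set `font-weight: 700` and `color: #222` (in different orders), the function reports them as one group, a hint that a single shared class could replace both.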

Split your styles out into separate files and only use what is needed. A common mistake we see is pages loading CSS they don’t need, so make sure each page loads only the CSS that is relevant to it.
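One simple way to do this on server-rendered pages is a small manifest that maps each route to the stylesheets it needs on top of a shared base file. This is a minimal sketch: the routes, file names, and the `stylesheetLinks` helper are all hypothetical, and many frameworks and bundlers can handle per-page CSS for you.

```typescript
// Hypothetical manifest: a small shared stylesheet plus per-route extras.
const SHARED_CSS: string[] = ["/css/base.css"];
const ROUTE_CSS: Record<string, string[]> = {
  "/": ["/css/home.css"],
  "/checkout": ["/css/forms.css", "/css/checkout.css"],
};

// Emit <link> tags for just the stylesheets a given route needs.
// Routes missing from the manifest get the shared CSS only.
function stylesheetLinks(route: string): string {
  const files = SHARED_CSS.concat(ROUTE_CSS[route] ?? []);
  return files.map((href) => `<link rel="stylesheet" href="${href}">`).join("\n");
}
```

The checkout page gets its form styles, while the home page never downloads them.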

If you’re using a bundler such as Webpack, make sure it is set up to bundle your CSS/SCSS correctly, and avoid relying on broad element selectors.

Broad element selectors can slow things down unnecessarily. Browsers match selectors against the elements in the DOM, and a bare element selector such as `div` or `a` matches a large number of them, so the browser has more work to do before it can apply the styles. These are incremental improvements, but they all add up to better site speed.

The problem becomes even more pronounced if you target elements inside other elements (for example `.nav a`), as the browser will find each target element and then walk back up the DOM tree to check that it sits inside the other element.
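Browsers generally match selectors from right to left. To make that concrete, here’s a toy matcher for descendant selectors like `.nav a`: it tests the rightmost part (often called the key selector) first and only then walks up the element’s ancestors, counting each test it performs. It’s a simplified model with a hypothetical `El` shape that supports only tag names and single class names; real engines add many optimizations on top.

```typescript
// Hypothetical element model with a parent pointer.
interface El {
  tag: string;
  classes: string[];
  parent: El | null;
}

// Match a descendant-only selector such as ".nav a" right to left,
// returning whether it matched and how many element tests were needed.
function matchesDescendant(el: El, selector: string): { matched: boolean; steps: number } {
  const parts = selector.trim().split(/\s+/);
  let steps = 0;
  const test = (node: El, part: string): boolean => {
    steps += 1;
    return part.startsWith(".") ? node.classes.includes(part.slice(1)) : node.tag === part;
  };

  // 1. The key selector: most elements are rejected here, cheaply.
  let i = parts.length - 1;
  if (!test(el, parts[i])) return { matched: false, steps };

  // 2. Walk up the ancestors, consuming selector parts from right to left.
  let node = el.parent;
  i -= 1;
  while (i >= 0 && node !== null) {
    if (test(node, parts[i])) i -= 1;
    node = node.parent;
  }
  return { matched: i < 0, steps };
}
```

For a link nested in `nav.nav > ul > li`, matching `.nav a` takes four tests, while the `li` itself is rejected after one because it fails the key selector straight away. With a bare element selector as the key, far more elements pass that first test and trigger the walk up the tree.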

Thinking about JavaScript

One of the biggest offenders for poor performance is JavaScript.

Thankfully, many strategies can be employed to deal with this.

As there are so many examples, we can’t go into full detail on each one here, so I’ll provide a brief overview of common themes.

Is the JavaScript necessary for the page?

Do you need to import an entire library, package, or module to do something as trivial as, say, animating a single element on one page? This could quite easily be done with vanilla JS or CSS.

In fact, most things can be done in vanilla JS, and compilers such as Babel let us use newer language features while still supporting older browsers.

You can also use tools such as BundlePhobia to see how big an npm package is before you decide whether to use it. It can make for sobering reading!

In other words, the vast number of JavaScript libraries out there is tempting for any developer, but their effect on website speed and SEO must be taken into consideration.

Is your JavaScript DRY?

Much like the CSS tips above, having correct and tidy JavaScript can do a world of good on its own. Remove unused code and unnecessary comments, and tidy up where possible.

Try to follow the Keep it Simple, Stupid (KISS) principle where possible.

Is your JavaScript bundled?

Are you using a bundler such as Webpack or Parcel to bundle your modules? If so, make sure you are splitting your code so that pages only load what they need, when they need it.
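Code splitting usually comes down to dynamic `import()`: Webpack and Parcel both treat `import("./module")` as a split point and emit that module as a separate file that is fetched on demand. The helper below is a minimal sketch (the `lazy` name is my own) that wraps such a loader so the chunk is requested only once, on first use; `./charts` and `renderChart` in the comments are hypothetical.

```typescript
// Wrap a loader (typically () => import("./some-module")) so it runs at most once.
// Concurrent callers share the same promise; a failed load is forgotten so that
// a later call can retry.
function lazy<T>(loader: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | null = null;
  return () => {
    if (pending === null) {
      pending = loader().catch((err: unknown) => {
        pending = null; // allow a retry after, e.g., a network error
        throw err;
      });
    }
    return pending;
  };
}

// Hypothetical usage in the browser: the charting code is only downloaded
// when the user actually asks for a chart.
// const loadCharts = lazy(() => import("./charts"));
// button.addEventListener("click", async () => {
//   const { renderChart } = await loadCharts();
//   renderChart();
// });
```

Caching the promise rather than the resolved module means two quick clicks still trigger only one download.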

Is your JavaScript minified?

Minification is the process of removing everything that makes a file unnecessarily large without affecting what the code does, such as comments, whitespace, new lines, and unnecessary semicolons. The original functionality of the code stays intact.

Webpack’s `optimization.minimize` option helps reduce the overall size of your bundle. It is enabled by default in production mode and works in the same way as the CSS minification discussed above.
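For reference, a minimal `webpack.config.ts` might look like the sketch below. The entry path is an assumption for illustration. Setting `mode: "production"` already turns `optimization.minimize` on (with Terser handling the JavaScript), so spelling it out is purely for clarity. CSS needs its own minimizer plugin, such as css-minimizer-webpack-plugin, which isn’t shown here.

```typescript
// webpack.config.ts (sketch). The entry path is a placeholder for your project.
const config = {
  mode: "production" as const,
  entry: "./src/index.js",
  optimization: {
    minimize: true, // already the default in production mode
  },
};

export default config;
```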

The less time the browser, or Googlebot, must spend parsing through useless code, the faster your website will be.

Optimize your images

It has long been a rule of thumb in the SEO community that images should be no larger than 200 KB.
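If you want to enforce a budget like this automatically, a few lines in a build script can do it. This is a minimal sketch. The file names and sizes in the example are made up, and 1 KB is taken as 1,024 bytes. In a real Node script you’d fill in `bytes` from `fs.statSync(path).size`.

```typescript
interface ImageAsset {
  file: string;
  bytes: number;
}

// 200 KB rule of thumb (1 KB = 1,024 bytes here).
const IMAGE_BUDGET_BYTES = 200 * 1024;

// Return the images that exceed the budget, largest first.
// filter() creates a new array, so sorting it leaves the input untouched.
function overBudget(images: ImageAsset[], budget: number = IMAGE_BUDGET_BYTES): ImageAsset[] {
  return images
    .filter((img) => img.bytes > budget)
    .sort((a, b) => b.bytes - a.bytes);
}
```

Running this over your output folder and failing the build when the list isn’t empty keeps oversized images from slipping through.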

There is a myriad of image optimization tools available. TinyJPG, for example, is a personal favorite for image compression.

It is a manual tool, but other tools do exist for automating the process should you need them.

If you do use any icons, make sure they’re SVGs and added to a sprite sheet for even more performance improvements.
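An SVG sprite sheet is usually a single hidden `<svg>` containing one `<symbol>` per icon, which pages then reference with `<use>`, so each icon’s markup ships only once. The sketch below builds that markup from a map of icons. The icon name and path data are hypothetical. It also assumes modern browsers, which accept a plain `href` on `<use>` (older ones needed `xlink:href`).

```typescript
interface IconSource {
  viewBox: string; // e.g. "0 0 24 24"
  body: string;    // inner SVG markup such as <path d="..."/>
}

// Combine icons into one hidden sprite; each becomes <symbol id="icon-...">.
function buildSprite(icons: Record<string, IconSource>): string {
  const symbols = Object.entries(icons)
    .map(([id, icon]) => `<symbol id="icon-${id}" viewBox="${icon.viewBox}">${icon.body}</symbol>`)
    .join("");
  return `<svg xmlns="http://www.w3.org/2000/svg" style="display:none">${symbols}</svg>`;
}

// Markup for a single icon that points at its symbol in the sprite.
// Note: `label` is not HTML-escaped in this sketch.
function iconMarkup(id: string, label: string): string {
  return `<svg class="icon" role="img" aria-label="${label}"><use href="#icon-${id}"></use></svg>`;
}
```

The sprite goes into the page once, and every `iconMarkup("search", "Search")` call after that costs only a few bytes.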

In conclusion

While it is easy to get sucked into the vortex of excess, it’s never too late to make a difference. With careful planning, you can avoid these issues in the first place; as they say, prevention is better than cure.

It’s worth mentioning that this list is not exhaustive. Site and page speed depend on far more than a handful of areas: everything from your servers to desktop versus mobile browsing and the platform your site runs on can have an impact, so it’s always worth exploring all of these if you’re struggling to improve your speed.