A guide to log file analysis and Googlebot access

If your server logs have always seemed like a bottomless silo of indecipherable data that just gets bigger and bigger, you could be missing out on crucial technical SEO value.

This guide will help you to understand the value of log file analysis for SEO and how to use it to identify opportunities for web marketing campaigns and search engine marketing.

What is a log file?

A server log file (often shortened to just “log file” or “server logs”) contains a record of all requests made to your website’s hosting server for a certain time period.

This includes requests from both human visitors and search engine bots. Each line in a log file represents an individual request.

Although data in server logs is relatively anonymous, it includes certain identifying elements.

These include the originating IP address, the page or content requested, the date and time of request, and a ‘user-agent’ field, which can identify whether the request is coming from a browser or a bot.

Be careful not to rely too heavily on the user-agent field, however, since user agents can be spoofed.

How to quickly verify Googlebot in your server log files

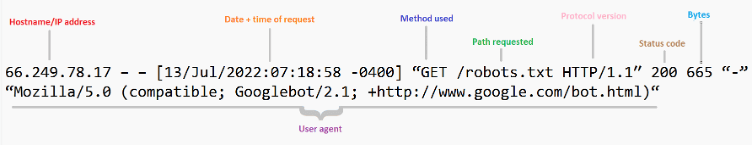

As mentioned above, a line in server logs contains several separate bits of information. Let’s break that down.

Take, for example, the following server log line:

66.249.78.17 - - [13/Jul/2015:07:18:58 -0400] "GET /robots.txt HTTP/1.1" 200 0 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

- 66.249.78.17 refers to the hostname or IP address

- [13/Jul/2015:07:18:58 -0400] refers to the date and time the request was made

- GET is the HTTP method used (essentially the type of request that was made)

- /robots.txt is the path that was requested

- HTTP/1.1 is the protocol version used for the request

- 200 is the response status code

- 0 refers to the number of bytes transferred
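The field breakdown above can be sketched in code. This is a minimal example that assumes the server writes the widely used "combined" log format, which the example line appears to follow; the pattern and the function name `parse_log_line` are our own illustration and would need adjusting for a custom log format.

```python
import re
from datetime import datetime

# Assumed layout (combined log format):
# host ident user [time] "method path protocol" status bytes "referrer" "user-agent"
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_log_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields["status"] = int(fields["status"])
    # Some servers log "-" instead of 0 when no body was sent
    fields["bytes"] = 0 if fields["bytes"] == "-" else int(fields["bytes"])
    fields["time"] = datetime.strptime(fields["time"], "%d/%b/%Y:%H:%M:%S %z")
    return fields


example = (
    '66.249.78.17 - - [13/Jul/2015:07:18:58 -0400] '
    '"GET /robots.txt HTTP/1.1" 200 0 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
record = parse_log_line(example)
print(record["host"], record["method"], record["path"], record["status"])
```

Lines that do not match return `None`, which makes it straightforward to set malformed entries aside rather than let them skew later counts.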

In the example above, we can see the request comes from a user agent that purports to be Googlebot.

However, this is not guaranteed because user agents can be faked, making the data unreliable. We have to rely on other information to validate the requests.

The key identification section of a log line is the hostname or IP address. Using that information, we can run a reverse DNS lookup on the accessing IP address, followed by a forward DNS lookup on the domain name it returns, and check that the result matches the original IP address.
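For a single IP address, that two-step check might look like the sketch below. It is not the script described in this guide; the function names are our own, and the list of accepted domain suffixes is an assumption based on Google's published verification guidance, so check it against the current documentation. The lookups are passed in as parameters so the logic can be tried without network access.

```python
import socket

# Assumption: suffixes Google documents for its crawlers' reverse DNS names
GOOGLE_DOMAINS = (".googlebot.com", ".google.com", ".googleusercontent.com")


def _reverse_lookup(ip):
    # socket.gethostbyaddr returns (hostname, aliases, addresses)
    return socket.gethostbyaddr(ip)[0]


def _forward_lookup(hostname):
    # socket.gethostbyname_ex returns (hostname, aliases, IPv4 addresses)
    return socket.gethostbyname_ex(hostname)[2]


def is_verified_googlebot(ip, reverse=_reverse_lookup, forward=_forward_lookup):
    """Reverse-resolve the IP, check the domain, then forward-resolve it back."""
    try:
        hostname = reverse(ip)
        if not hostname.lower().rstrip(".").endswith(GOOGLE_DOMAINS):
            return False
        # The hostname must resolve back to the IP that made the request
        return ip in forward(hostname)
    except OSError:
        # Covers failed lookups (socket.herror and socket.gaierror)
        return False


# Offline demonstration with stubbed lookups; the hostname is illustrative only
print(is_verified_googlebot(
    "66.249.78.17",
    reverse=lambda ip: "crawl-66-249-78-17.googlebot.com",
    forward=lambda host: ["66.249.78.17"],
))
```

Calling `is_verified_googlebot(ip)` without the stubs performs real DNS queries, so on a large log it is worth caching results per IP address. Note that `gethostbyname_ex` only handles IPv4.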

As server log files tend to be very large, it’s extremely time-consuming to verify all these bots manually.

To speed up the process, we have written a simple Perl script that does this for us and converts the verified lines to CSV at the same time, so we can run further analysis.

To use this script, you can simply run the following command, and the output will be a CSV that contains a list of verified Googlebot accesses:

perl GoogleAccessLog2CSV.pl serverfile.log > verified_googlebot_log_file.csv

You can also write the invalid log lines to a separate file by running the following command:

perl GoogleAccessLog2CSV.pl < serverfile.log > verified_googlebot_log_file.csv 2> invalid_log_lines.txt

Why are log files useful to us?

The data stored in a log file is useful when troubleshooting, as it can show when an error occurred, but its value for technical SEO should not be overlooked either.

Missing pages

Sometimes Google may be unable to crawl pages. This can be due to robots.txt blocking it from accessing a section of the site, or possibly something like poor internal linking or a noindex tag reducing the value of a URL to Google.

If a section of the site is consistently missing from your server logs, this should be considered a big red flag, and is likely due to Google having trouble accessing that URL.
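One way to spot a missing section is to compare the sections you expect to be crawled against those that actually appear in verified Googlebot requests. The sketch below assumes a "section" is the first directory in the URL path; the paths and section names are hypothetical.

```python
from collections import Counter


def top_level_section(path):
    """Map '/blog/post-1?x=1' to '/blog/'; '/' and '/about' map to '/'."""
    parts = path.split("?", 1)[0].split("/")
    # parts[0] is '' for any path that starts with '/'
    return f"/{parts[1]}/" if len(parts) > 2 else "/"


def sections_missing_from_logs(crawled_paths, expected_sections):
    """Return the expected sections that received no requests at all."""
    seen = Counter(top_level_section(p) for p in crawled_paths)
    return [section for section in expected_sections if seen[section] == 0]


# Hypothetical paths taken from verified Googlebot log lines
googlebot_paths = ["/blog/post-1", "/blog/post-2", "/", "/products/widget?colour=red"]
expected = ["/blog/", "/products/", "/support/"]
print(sections_missing_from_logs(googlebot_paths, expected))
```

Here `/support/` would be flagged, since no request in the sample touches it.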

If the logs show Googlebot is avoiding your site entirely, a good place to start off would be robots.txt.

A common but potentially devastating mistake is forgetting to remove a robots.txt "Disallow: /" (disallow all) line when pushing a site from staging to production.

If you’re not seeing any data from a specific search robot in your server logs, it’s worth checking your robots.txt file to ensure you haven’t accidentally blocked them across your entire server.
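A quick way to test a robots.txt file against a URL is Python's standard `urllib.robotparser` module, sketched below with two hypothetical rule sets. Bear in mind that this parser does not replicate every detail of how Google interprets robots.txt (wildcards, for example), so treat the result as a first check rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical rules: a staging file left in place vs. an intended production file
staging_rules = "User-agent: *\nDisallow: /"
production_rules = "User-agent: *\nDisallow: /admin/"

url = "https://www.example.com/products/"
print(googlebot_allowed(staging_rules, url))     # blocked by the disallow-all line
print(googlebot_allowed(production_rules, url))  # only /admin/ is blocked
```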

Crawl budget issues

When a search engine crawls your website, it allocates a ‘crawl budget’, which is based on the crawl capacity limit and crawl demand.

This means the crawl budget can be influenced by a number of things ranging from the popularity of your individual pages and the time it takes those pages to load, to Google’s own resources.

However, the crawl budget can essentially be simplified to the number of pages Google will crawl before moving on.

The internet is vast and, with some websites hosting many thousands of pages, crawl budgets are a way for search engine bots to avoid getting stuck on one site for a long period.

In addition, a crawl budget acts as Google’s safeguard against websites with setups that result in infinite crawling, such as misconfigured URL parameters or endless pagination.

We can use server logs to identify where Googlebot spends the majority of its time and whether the crawl budget is being wasted on areas of the site that are broken or of no value.
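As a rough illustration, the share of Googlebot requests per section, and the share spent on parameterised URLs, can be computed as follows. The paths are hypothetical, and treating the first directory as the "section" is an assumption that may not suit every site structure.

```python
from collections import Counter
from urllib.parse import urlsplit


def crawl_share_by_section(paths):
    """Return {section: percentage of requests}, largest share first."""
    counts = Counter()
    for path in paths:
        segments = [s for s in urlsplit(path).path.split("/") if s]
        # First directory is the section; single-segment URLs count as root
        section = f"/{segments[0]}/" if len(segments) > 1 else "/"
        counts[section] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {section: round(100 * n / total, 1) for section, n in counts.most_common()}


def parameterised_share(paths):
    """Return the percentage of requests whose URL carries a query string."""
    if not paths:
        return 0.0
    with_query = sum(1 for p in paths if urlsplit(p).query)
    return round(100 * with_query / len(paths), 1)


# Hypothetical paths from verified Googlebot requests
paths = [
    "/shop/shoes?sort=price",
    "/shop/shoes?sort=name",
    "/shop/shoes?sort=price&page=2",
    "/blog/log-files",
    "/",
]
print(crawl_share_by_section(paths))
print(parameterised_share(paths))
```

A section that takes a large share of requests mostly through sorted or paginated variants of the same page is a candidate for closer inspection.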

If we see Googlebot spending time in an area of the site with value to users but not bots, we could utilise robots.txt to block that section of the site to (well-behaved) bots.

Non-200 pages

If Googlebot is wasting a lot of time on non-200 pages, this is likely a sign some housekeeping is in order.

It’s fairly common for sites to have some internal links to pages that no longer exist and which either redirect or 404.

However, if a large site has not updated internal links to point to the new location and redirects and 404s have accumulated, it can begin to impact crawl budget.

This is because Googlebot has to make two requests to reach content that sits behind a redirect, and a request that ends in a 404 returns nothing of value.
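To gauge how much crawling goes to non-200 responses, the requests can be grouped by status class and the most frequently hit non-200 URLs listed. This is a minimal sketch; the `(path, status)` tuples are hypothetical and would normally come from parsed, verified Googlebot log lines.

```python
from collections import Counter


def status_summary(records, top_n=5):
    """records: iterable of (path, status) pairs.

    Returns (requests per status class, most requested non-200 URLs).
    """
    by_class = Counter()
    non_200 = Counter()
    for path, status in records:
        by_class[f"{status // 100}xx"] += 1
        if status != 200:
            non_200[(path, status)] += 1
    return by_class, non_200.most_common(top_n)


# Hypothetical sample of Googlebot requests
records = [
    ("/", 200),
    ("/old-page", 301),
    ("/old-page", 301),
    ("/missing", 404),
    ("/blog/", 200),
]
by_class, worst = status_summary(records)
print(dict(by_class))
print(worst)
```

URLs near the top of the second list are the ones where updating internal links is likely to save the most requests.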

Crawl to traffic delay

One final way to use server logs for SEO is to look at the first crawl date for a page and compare it against your analytics data to see when organic traffic started to arrive.

If your website is quite consistent in this regard, you can start to factor this lag into your seasonal SEO campaigns, so you publish content early enough for users to find. This is especially useful before an event like Christmas takes place.
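The lag itself is simple date arithmetic. The sketch below assumes you already have, for each URL, the first crawl date from your logs and the first organic visit date from your analytics platform; every URL and date shown is a made-up sample value.

```python
from datetime import date
from statistics import median


def crawl_to_traffic_lag(first_crawl, first_organic_visit):
    """Return {url: days between first crawl and first organic visit}."""
    lags = {}
    for url, crawled_on in first_crawl.items():
        visited_on = first_organic_visit.get(url)
        # Skip pages with no organic traffic yet, or with inconsistent dates
        if visited_on is not None and visited_on >= crawled_on:
            lags[url] = (visited_on - crawled_on).days
    return lags


# Hypothetical dates: first crawl from server logs, first visit from analytics
first_crawl = {
    "/christmas-gifts": date(2022, 10, 3),
    "/advent-offers": date(2022, 10, 10),
    "/new-year-sale": date(2022, 11, 1),
}
first_organic_visit = {
    "/christmas-gifts": date(2022, 10, 17),
    "/advent-offers": date(2022, 10, 20),
}
lags = crawl_to_traffic_lag(first_crawl, first_organic_visit)
print(lags)
print(median(lags.values()))
```

The median is used here rather than the mean so that one unusually slow page does not distort the planning figure.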

Combining data from multiple platforms

To dive deeper into server log analysis, you can export your data into appropriate data analysis tools such as Google Data Studio.

Here you can have a separate column for each parameter and apply formatting, formulae and analysis.
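If you are preparing the export yourself, Python's standard `csv` module can write one column per field. This is a sketch only: the column names are our own choice and not the output format of the Perl script mentioned earlier.

```python
import csv
import io

# Assumed column names; adjust to match whatever your parser produces
FIELDS = ["host", "time", "method", "path", "status", "bytes", "user_agent"]


def records_to_csv(records, out):
    """Write dict records to the file-like object `out`, one column per field."""
    writer = csv.DictWriter(out, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(records)


records = [{
    "host": "66.249.78.17",
    "time": "13/Jul/2015:07:18:58 -0400",
    "method": "GET",
    "path": "/robots.txt",
    "status": 200,
    "bytes": 0,
    "user_agent": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
}]

buffer = io.StringIO()
records_to_csv(records, buffer)
print(buffer.getvalue())
```

When writing to a real file instead of a `StringIO` buffer, open it with `newline=""` so the `csv` module controls the line endings.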

You can incorporate reports from other platforms too, such as website analytics and SEO tools. Don’t forget to compare your data against a recent site crawl, as this can help you connect the dots on how Google is crawling.

Knowing how to process all this data is relatively advanced, but it can be a powerful way to gain an in-depth technical overview of your server, website and SEO campaigns.

Conduct regular audits

SEO is not a one-time-only task. Maintaining a good search presence means publishing new content over time, building your website’s number of crawled and indexed pages, and responding to competitors publishing optimised content of their own.

As your website grows, and especially if you make changes to existing content, the likelihood of crawl errors increases too.

Server log analysis for SEO is a way to spot those errors and optimise the way search robots crawl new content on your site, so valuable pages aren’t missed during the next round of indexing.

Make it a regular admin task to check your server log data and take any necessary actions to protect your SEO value across your website.

If you’re in any doubt about how to proceed, consult a technical SEO expert.